This year on my team, we had a moment where we realized that our UX quality was slipping. It started with a few surprising feedback tickets, then we noticed some patterns in our Dovetail user research sessions, then we got some rather harsh NPS scores, until finally we realized we needed to quantify our UX quality to really see what was going on. This story is how we are doing that now.

First I’ll say that we care deeply about quality but, like many teams, we struggle to explain the ROI of design to leadership, especially given we’re an Enterprise B2B. We sadly tend to skew a bit too far toward the ‘feature factory’ end of the spectrum, so we knew that this turnaround was not going to be fast or easy, but would be slow, met with skepticism, and require lots and lots of presentations 🙃.

Sound familiar to other design leads out there? I bet. Here’s what we’re doing.

Step 1 – Gain Allies

As with any large initiative, I try to get a lot of people to fall in love with the problem with me first. I show them raw ideas and get them to come to the conclusion on their own that ‘something must be done!’ Once you have a group of cross-functional allies, or cheerleaders, who agree this is a problem worth solving, your message and ideas spread more easily.

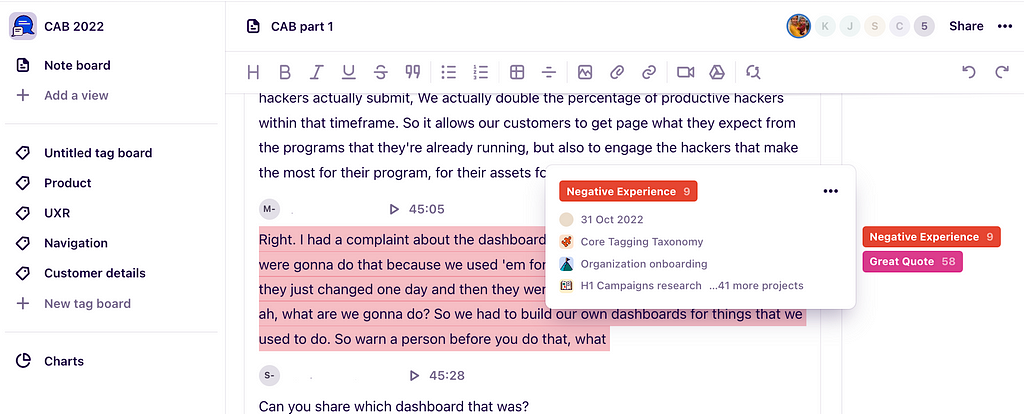

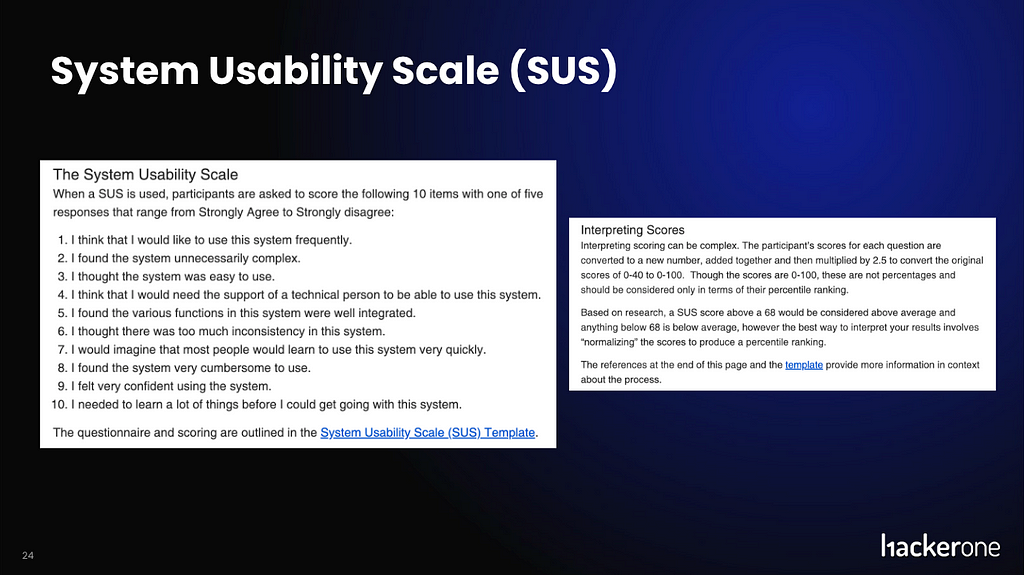

In my case, I wanted to start with engineering and product leadership. I gathered as much ‘low hanging’ data as I could. As mentioned above, I synthesized all the qualitative data we had, and I also ran an internal SUS study with the product and engineering teams to get a barometer of what we ourselves felt about our product’s UX. (Although we ‘are not our users’, that was a quick way to get an important signal early on.)

SUS results: the first 2 questions come from SUS; the 3rd is more of a reflective question about our internal process:

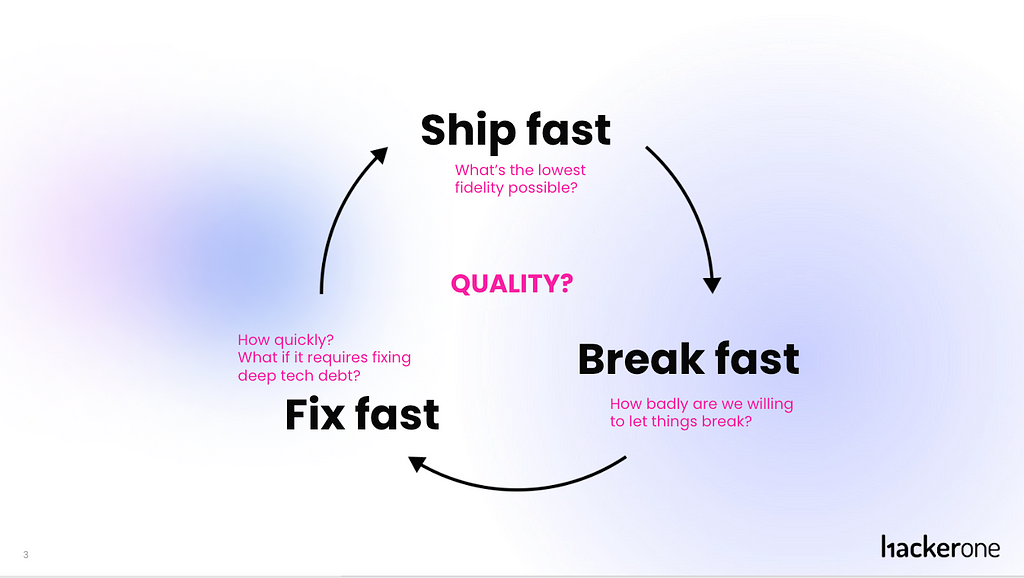

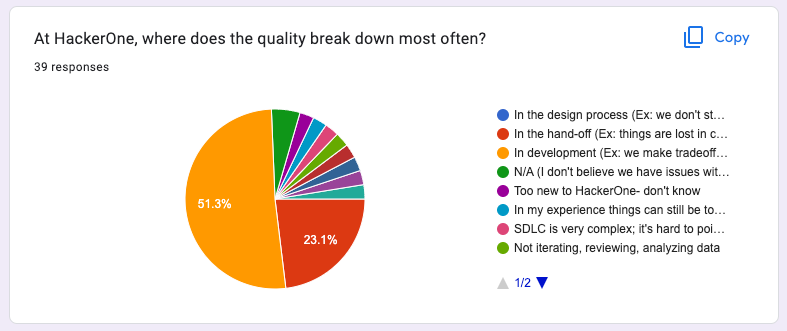

With the engineering leads we also examined our ethos of ‘ship fast, break fast, fix fast’ and discovered together that quality control was being overlooked (or on some squads nonexistent), and it certainly wasn’t standardized across the org.

Here’s a slide from one of many presentations 😝:

Step 1 was done: we agreed that our quality was below where we wanted it to be.

Step 2 – Find the root cause of the problem

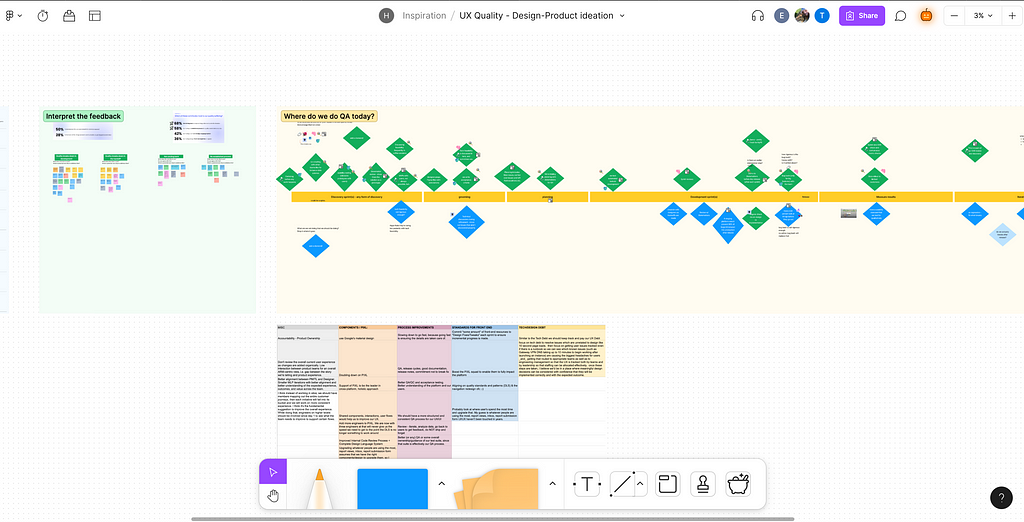

At this point I had my allies, I had some stakeholder approvals to explore, and now I needed to find out how bad it really was. For this I conducted internal workshops with each squad where we asked, ‘How do we ensure quality today?’ I love me a good FigJam ;)

We discussed what we do today that works, what doesn’t work, and what areas we need to improve upon. (Note: we have no QA team and have no plans to hire for that position 🙃)

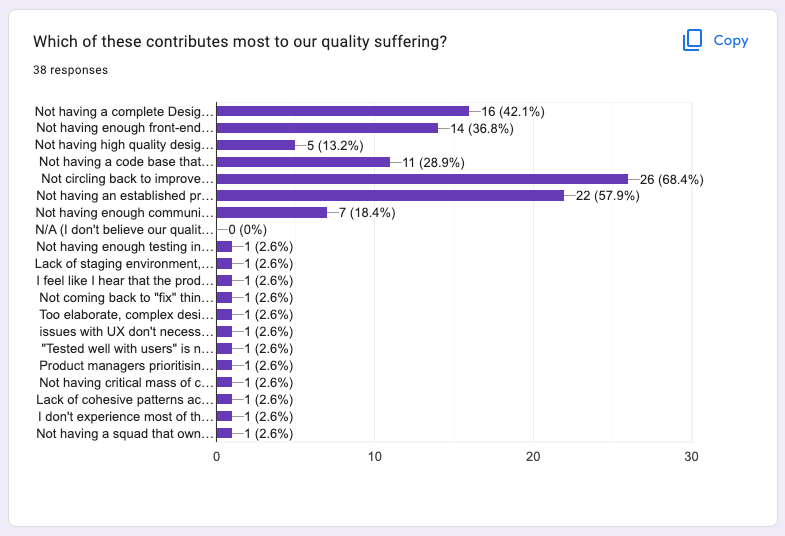

So far we’ve done a few workshops and already have some key synthesized findings:

- We have no one definition of quality

- Every squad has its own rituals that play to its strengths but aren’t written down

- Besides process, three things hold us back: tech debt, limited DLS components, and a lack of front-end expertise on each squad.

Some images from a survey that went along with the workshops:

Step 3 – Measure what matters

So now it’s time to address that $50,000 question: ‘What is UX quality?’ Seriously, how do we define it? How will we measure it? Thankfully, we are not reinventing the wheel here. There are plenty of methods out there. It’s just a matter of choosing the one that works for us. Which method will get us to our end goal? How hard will its data be to collect? How accurate is it? How often must we run it? So I presented a few options:

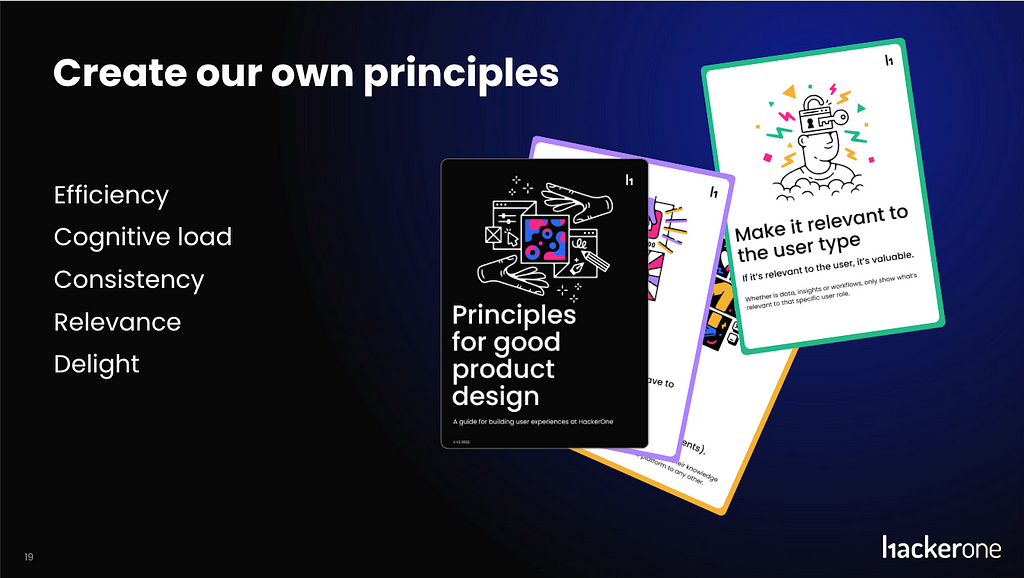

- Create our own principles

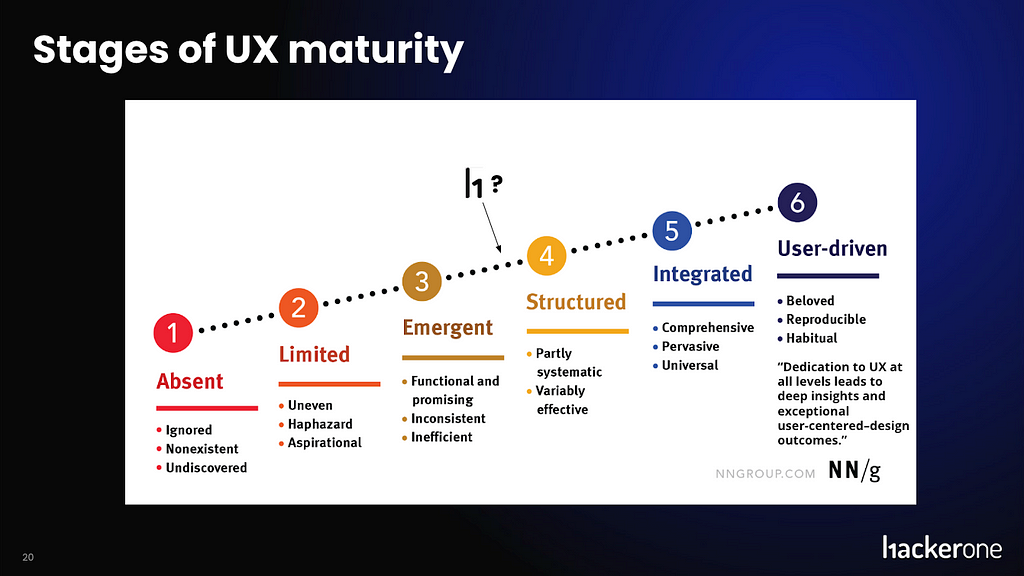

- Have a UX maturity target

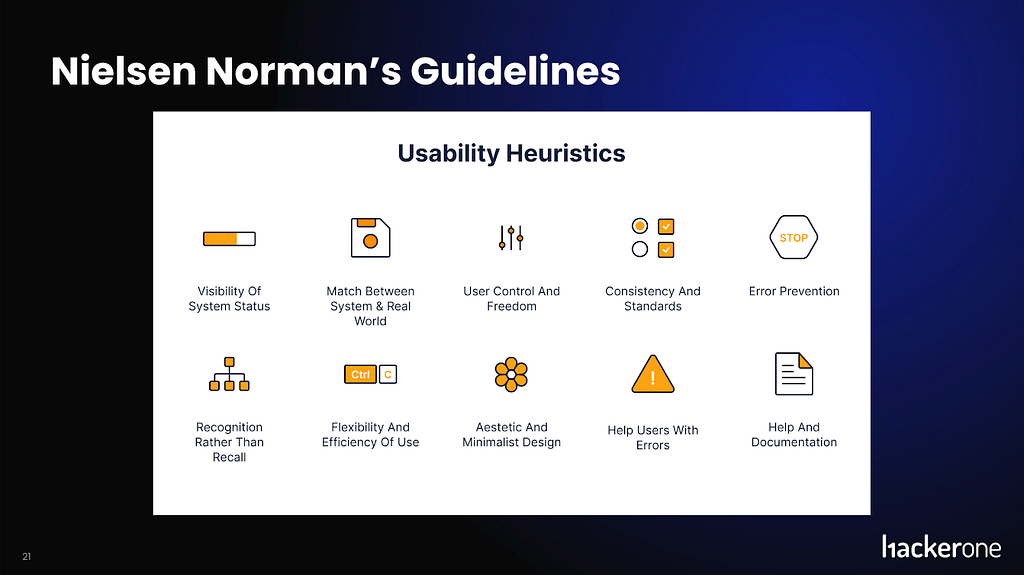

- Nielsen Norman “Usability Heuristics” Principles

- Hierarchy of user needs

- H.E.A.R.T.

- SUS (System Usability Scale)

Let’s go over these one by one in a bit of detail.

1. We could create our own principles, based on the specific usability needs of our exact personas, and use these gorgeous cards at strategic moments in our handoff process (e.g., in design crit, or in final QA after we’ve inevitably made tradeoffs). A fellow designer on my team made these cards and he’s sending them out to everyone this month!

2. We could evaluate ourselves against the NN/g 6 levels of UX maturity, and have a shared goal of becoming a ‘6’, which means “Dedication to UX at all levels leads to deep insights and exceptional user-centered–design outcomes.”

3. We could go VERY basic and baseline and say that we just have to meet the NN/g Usability Heuristics Guidelines. I would argue this is not enough if you are truly a human-centered org, but a good baseline nonetheless.

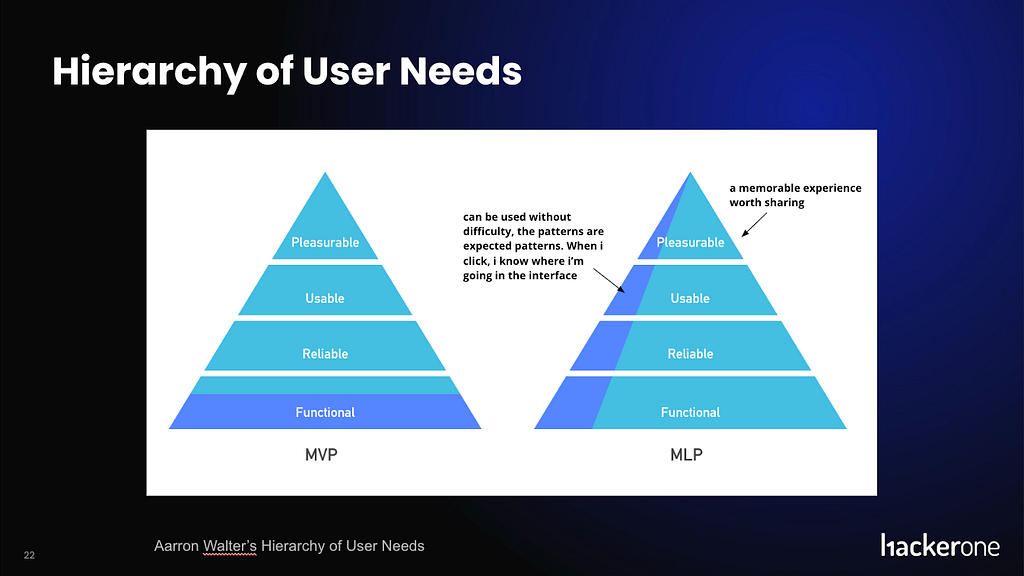

4. The Hierarchy of User Needs is a great tool for orgs that tend to ship MVPs and never circle back for later refinement. This framework forces the org to define success metrics for every MVP, plus an action plan for what happens once you hit the metric (PMF, or what have you), so you know how to proceed towards an MLP (minimum lovable product). The goal of an MLP must be specific, and you must define ahead of time what level of ‘usable’ and ‘pleasurable’ you are striving for.

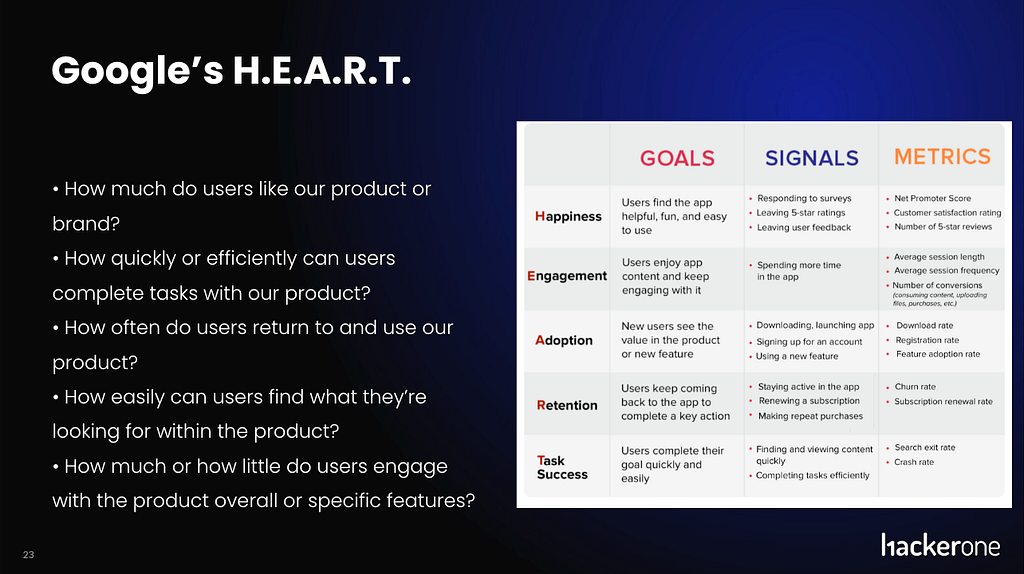

5. You could go extremely robust and follow Google’s H.E.A.R.T. framework (Happiness, Engagement, Adoption, Retention, Task success), about which there are many other Medium posts :)

6. Or finally (for my list at least), you could implement the tried-and-true System Usability Scale and use its 10-question survey to get a more generic usability score. This can be highly effective if you run it periodically, for example after each large release.
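For anyone who hasn’t scored a SUS survey before, here is a rough sketch of the standard arithmetic in Python. Each of the 10 answers is on a 1 to 5 scale; odd-numbered questions contribute (answer minus 1), even-numbered questions contribute (5 minus answer), and the sum is multiplied by 2.5 to give a score from 0 to 100. The function names, release labels, and answers below are all made up for illustration; they are not our real survey data or tooling.

```python
from statistics import mean


def sus_score(responses):
    """Return the 0-100 SUS score for one respondent's ten answers (each 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 answers")
    if any(answer not in (1, 2, 3, 4, 5) for answer in responses):
        raise ValueError("each answer must be an integer from 1 to 5")

    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Questions 1, 3, 5, 7, 9 are positively worded.
            total += answer - 1
        else:
            # Questions 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - answer
    return total * 2.5


def mean_sus_by_release(surveys):
    """Average the per-respondent scores for each release label."""
    return {
        release: mean(sus_score(row) for row in rows)
        for release, rows in surveys.items()
    }


# Hypothetical answers, invented purely to show the shape of the data.
example_surveys = {
    "release A": [[4, 2, 4, 2, 4, 2, 4, 2, 4, 2], [3, 3, 3, 3, 3, 3, 3, 3, 3, 3]],
    "release B": [[5, 1, 5, 1, 5, 1, 5, 1, 5, 1], [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]],
}

for release, score in mean_sus_by_release(example_surveys).items():
    print(f"{release}: mean SUS {score}")
```

Grouping scores by release like this is one simple way to watch the number move after each large release; a spreadsheet would do the same job.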

We have yet to pick one, but I’ll post again once we do!

Step 4 – Get a baseline

So this brings us to today. After considering these 6 ways of measuring UX, we will soon choose one and embark on getting a baseline metric. Without a dedicated UXR team (or even person) it’s hard to accomplish a robust user metric like this. We don’t want to conflict with our annual NPS survey, and we have many other feedback initiatives and usability tests constantly going on. So how do we weave this in? Check back for my next article to read how we do it!

Additionally, since we’re already armed with our qualitative user quotes, NPS data, and internal survey results, we know that we can already start improving our processes, so we will not hesitate there. We will standardize certain components of our agile process to codify quality along the way.

Logistical notes: I work at a 450-person company with a roughly 100-person product, design, and engineering group, so some of these ideas may be right-sized to that scale. We have 11 engineering squads and 9 IC designers.

Thank you all for reading this article. I absolutely love being a lead designer and getting to take on these types of meaty people + process improvement topics.

How does your team measure UX quality? was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.