Designing for safe and trustworthy AI

Why human oversight to make up for AI errors doesn’t work and what we can do instead

As powerful as AI is, it can sometimes be misleading or simply wrong. That realization came too late for a New York lawyer, who now faces a court hearing of his own after using ChatGPT to research similar cases [6]. ChatGPT produced a number of cases, complete with quotes and citations. Unfortunately for the lawyer, all of these cases, quotes and citations were invented by ChatGPT. Even more remarkably, the lawyer asked ChatGPT whether the cases were real and what the sources were. ChatGPT confidently confirmed that they were and named sources: legitimate sources that, however, never contained any of the content ChatGPT had come up with.

Current AI systems seek to mitigate AI mistakes by requiring human oversight: they keep the human in the loop and rely on them to detect and fix the AI output if needed. The story of the unlucky New York lawyer, and others like it, teaches us that this approach doesn’t work. If an AI like ChatGPT is right most of the time, it is really hard for a human to judge whether it is right or wrong in a particular case. And just adding a small disclaimer pointing out that the AI “may produce inaccurate information,” as ChatGPT does, apparently doesn’t prevent people from falling for the AI’s hallucinations. It also puts the human in the unpleasant position of taking all responsibility for an output or action that was mainly determined by the AI.

While this approach may (or may not) suffice for low-stakes scenarios where errors carry minor consequences, we must exercise greater caution and deliberation when deploying AI in high-stakes situations. Consider the risks of AI suggesting an incorrect treatment to a doctor, guiding a plane full of passengers into a storm, or recommending to a judge that an innocent person be imprisoned. In such critical domains, we need to be more considerate and vigilant.

Why human oversight to make up for AI errors doesn’t work

Academic researchers, including myself, have investigated the challenges and risks of human-AI interaction and surfaced a set of problems we should keep in mind when designing AI for high-stakes situations.

- AI is not transparent

Most AI algorithms and their outputs are black boxes for the end user, who gets no insight into the inner workings of the AI or why it arrived at a certain output [7]. This makes it hard for users to determine when they should follow an AI’s recommendation and when they shouldn’t. It also prevents them from seeing the bigger picture and context of the AI’s output.

- Displaying AI explanations and a confidence score can cause over-reliance

Several studies [e.g. 1,2] have shown that displaying AI explanations or the AI’s confidence score often doesn’t help users recognize when not to trust the AI. It can even lead to over-reliance, because the system seems more transparent and trustworthy without actually being so.

- People take more risks when supported by AI

Researchers have also shown that people are inclined to take more risks when they are supported by AI, and that they did so even in cases where the AI wasn’t helpful [3].

- AI support leads to less cognitive engagement

Similar to how most drivers don’t pay attention to the road while driving (semi-)autonomous cars, even though they are supposed to [8], people are also less cognitively engaged in their task when supported by AI systems [4]. This causes problems when the human has to take over because the AI is failing, since they aren’t sufficiently aware of the situation.

- Users might not want to use an AI system in a high-stakes situation if they aren’t familiar with it

Users in high-stakes situations are already under high stress. Having to get familiar with an unknown AI system in that situation only increases their stress level, or might lead them to ignore the system altogether [5].

- Judging AI output takes time and mental capacity

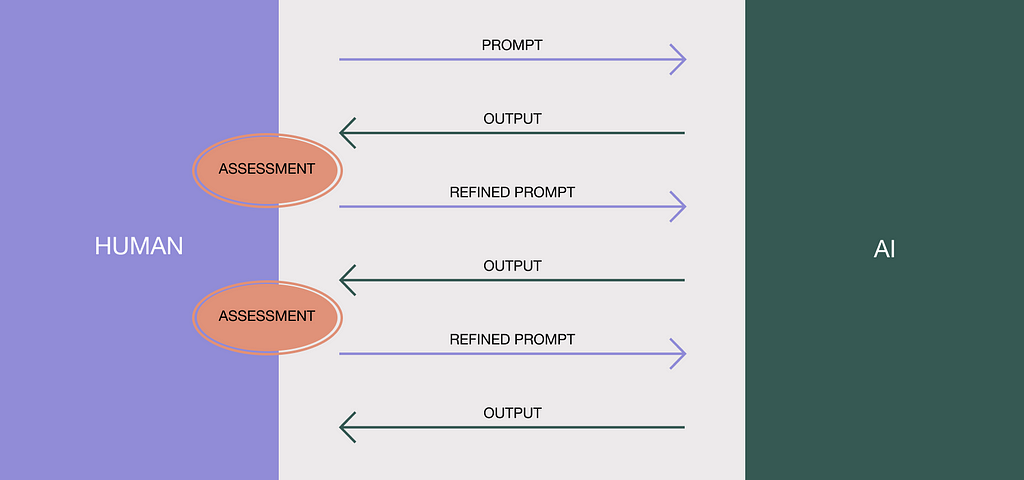

Our interactions with generative AI, like ChatGPT or Midjourney, are turn-based. The human defines a prompt, the AI responds, the human judges the output and refines the prompt, and the process iterates until the desired output is achieved. This back and forth can go on for a long time. It not only takes time: each time the human needs to assess the AI’s output, it also takes mental capacity. A pilot or doctor might have neither the time nor the mental capacity to spare in a high-stakes situation. An airplane pilot I interviewed for my research put it this way:

“If I would use the system with this approach, I wouldn’t use it at all. […] Then it occupies me more than taking the decision myself.” – Pilot

From human oversight to human in the centre

Integrating AI into our workflows, particularly in high-stakes scenarios, demands thoughtful consideration and caution. We must resist merely imposing AI capabilities on users who then have the responsibility to oversee the AI. We need to instead take a holistic approach, designing for the problem space and not only the moment of the human-AI interaction.

During my research project with the European Union Aviation Safety Agency (EASA) on certifying AI for aviation, my colleague Tony Zhang and I focused on designing an AI-based diversion support system to aid pilots in deciding where to land during emergencies.

Taking a more comprehensive view of the problem, we discovered that pilots continuously evaluate their options for potential emergencies during normal flight operations, so that they have a solution ready when an emergency arises. Shifting our focus from the moment of decision to the decision-making process allowed us to design for normal flight operations rather than emergency cases. This change in approach presented new design opportunities that also address other issues concerning human oversight of AI, and it moved us towards a more human-centered approach: one that takes the human context, needs and workflows into account.

Design Guidelines

- Augment the process, don’t present the solution.

Using AI to augment the decision-making process empowers users to make informed choices without the burden of judging AI suggestions. This reduces work and mental load in high-stakes situations, granting users more agency and control.

In the example of AI-based diversion support, we integrated an AI to enhance pilot situational awareness during regular flight operations. By doing so, pilots are better prepared to make optimal decisions during emergencies, avoiding the need to assess AI suggestions in critical moments. This proactive approach ensures pilots remain in control, fostering safer and more efficient outcomes.

- Build trust through familiarity.

To establish trust and proficiency in using an AI system during high-stakes situations, users must first become familiar with it. This requires integrating the AI into low-stakes scenarios within the users’ everyday workflow, where they have sufficient mental capacity to observe the AI’s behaviour and learn to interact with it.

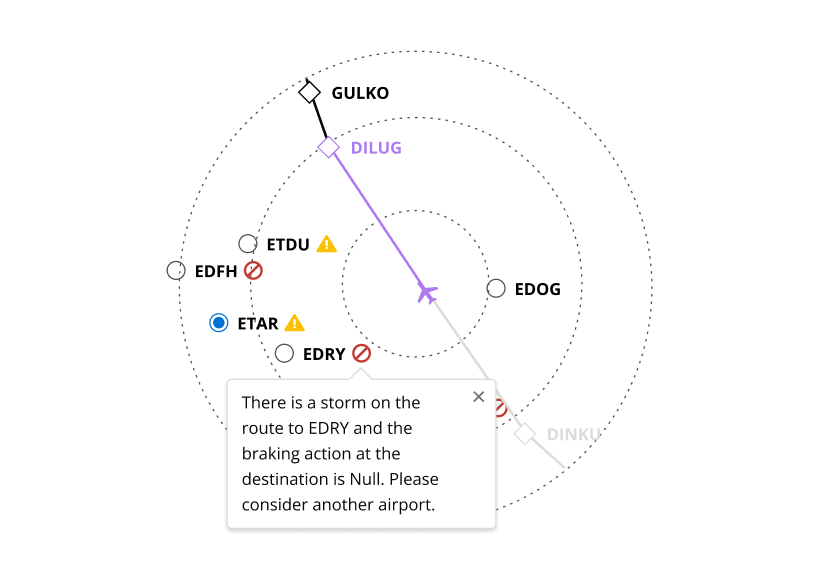

Rather than introducing entirely new AI systems, we can integrate AI into the systems and workflows users are already familiar with. Auxiliary AI support can give users a sense of the AI’s capabilities without requiring heavy reliance on it or drastic changes to their work processes. For instance, in the case of the AI-based diversion support system, we display the AI’s risk evaluation alongside NOTAMs (Notices to Air Missions), which pilots typically read for airport information.

By offering these low-risk entry points, we enable users to gradually acclimate to the AI, making their interaction with the AI safer and more effective for use in high-stakes scenarios. This approach fosters a smoother transition to AI adoption, promoting user confidence and successful integration of AI into critical operations.

- Support understanding through exploration.

In order to interact safely and responsibly with an AI, users need to build mental models of how these systems work. People understand better when they actively interact and explore than when they only perceive and consume explanations and information. It’s learning by doing. However, users can’t explore and investigate freely in high-risk situations, so we need to give them opportunities to do so at low risk.

In the case of the AI diversion support system, we integrated a “simulate emergency” feature. When activated during a regular flight, it displays the AI’s evaluation of the surrounding airports with respect to a specific emergency type, e.g. a medical emergency. This proactive approach enables pilots to prepare for potential emergencies and take preemptive actions to position themselves better, such as adjusting the flight path to reduce the distance to the nearest airports in case this emergency actually happens. Additionally, this feature encourages pilots to explore and interact with the AI, gaining insight into its behaviour in various emergency scenarios. It also helps them build a mental model of the AI, which in turn helps them use it safely in an actual high-risk situation.
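
To make the idea of a “simulate emergency” view more concrete, here is a minimal sketch in Python. It is not the system we built: the `Airport` fields, the `simulate_emergency` function, the `min_runway_m` threshold and the two checks are all hypothetical and chosen only for illustration. The point it tries to show is that each airport is listed with the concerns that apply to a given emergency type, nearest first, and that no single “best” airport is selected for the pilot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Airport:
    # All fields are illustrative assumptions, not real system data.
    name: str
    distance_nm: float       # distance from the current position
    runway_length_m: int
    hospital_nearby: bool


def simulate_emergency(airports, emergency_type, min_runway_m=1800):
    """Return one evaluation per airport, nearest first.

    The function deliberately does not pick a winner: it only lists
    the concerns per airport so the pilot can compare the options.
    """
    evaluations = []
    for airport in sorted(airports, key=lambda a: a.distance_nm):
        concerns = []
        # Hypothetical checks standing in for the AI's evaluation.
        if airport.runway_length_m < min_runway_m:
            concerns.append("runway shorter than required")
        if emergency_type == "medical" and not airport.hospital_nearby:
            concerns.append("no hospital nearby")
        evaluations.append({
            "airport": airport.name,
            "distance_nm": airport.distance_nm,
            "concerns": concerns,
        })
    return evaluations


if __name__ == "__main__":
    nearby = [
        Airport("A", 80.0, 2500, False),
        Airport("B", 40.0, 1500, True),
        Airport("C", 120.0, 3000, True),
    ]
    for entry in simulate_emergency(nearby, "medical"):
        print(entry)
```

Returning every airport together with its concerns, rather than a ranked recommendation, follows the first guideline: the output augments the pilot’s own evaluation instead of replacing it.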

Looking forward

So, what could have helped the New York lawyer? First, we need to take into consideration that ChatGPT isn’t an actual tool for legal research. It is just a (very good) large language model that was used for that purpose. Its interface, its interactions and its algorithm aren’t designed and implemented for legal research; they are created to interact via natural language. I argue that at the current state of AI we can and need to create an interface layer on top of these AI tools: interfaces that are carefully designed with the user’s purpose in mind and that mitigate risks by thoughtfully embedding the AI into the user’s context. We must not only fine-tune models to specific use cases but also craft specialised interfaces tailored to particular contexts, all while proactively addressing potential AI-related risks. For instance, this might involve creating an AI-assisted legal research tool that uses the AI system to make legal resources and cases more accessible and easier to search.

I’m sure AI can be of great use for legal research today, if it is used through an interface that handles and presents sources responsibly and supports lawyers in a way that doesn’t encourage over-reliance. Such an interface can be created through careful, human-centered design.
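
As one illustration of what “handling sources responsibly” could mean in such an interface layer, the sketch below checks every citation an AI produces against a trusted source instead of asking the model to confirm itself. Everything in it is an assumption made for the example: `Citation`, `check_citations` and the `trusted_index` dictionary (a stand-in for a real legal database) are hypothetical names, and the case text is placeholder data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    case_name: str
    quote: str


def check_citations(citations, trusted_index):
    """Label each citation by looking it up in a trusted source.

    `trusted_index` maps a case name to the full text of that case.
    It is a hypothetical stand-in for a real legal database.
    """
    results = []
    for citation in citations:
        full_text = trusted_index.get(citation.case_name)
        if full_text is None:
            status = "case not found"
        elif citation.quote not in full_text:
            status = "quote not found in case"
        else:
            status = "verified"
        results.append((citation, status))
    return results


if __name__ == "__main__":
    # Placeholder data, not real cases.
    index = {"Example v. Sample": "The court held that the claim was timely."}
    ai_output = [
        Citation("Example v. Sample", "the claim was timely"),
        Citation("Example v. Sample", "the claim was barred"),
        Citation("Invented v. Nobody", "any quote"),
    ]
    for citation, status in check_citations(ai_output, index):
        print(f"{citation.case_name}: {status}")
```

An interface built on a check like this could show the status next to each citation, so that an unverifiable case is visibly flagged rather than presented with the same confidence as a verified one.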

Special thanks to Tony Zhang with whom I’ve conducted most of the research mentioned in this article.

Sources

[1] Gaube, S., Suresh, H., Raue, M., Merritt, A., Berkowitz, S. J., Lermer, E., … & Ghassemi, M. (2021). Do as AI say: susceptibility in deployment of clinical decision-aids. NPJ digital medicine, 4(1), 31.

[2] Fogliato, R., Chappidi, S., Lungren, M., Fisher, P., Wilson, D., Fitzke, M., … & Nushi, B. (2022, June). Who goes first? Influences of human-AI workflow on decision making in clinical imaging. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (pp. 1362–1374).

[3] Villa, S., Kosch, T., Grelka, F., Schmidt, A., & Welsch, R. (2023). The placebo effect of human augmentation: Anticipating cognitive augmentation increases risk-taking behavior. Computers in Human Behavior, 146, 107787.

[4] Gajos, K. Z., & Mamykina, L. (2022, March). Do people engage cognitively with AI? Impact of AI assistance on incidental learning. In 27th international conference on intelligent user interfaces (pp. 794–806).

[5] Storath, C., Zhang, Z. T., Liu, Y., & Hussmann, H. (2022). Building trust by supporting situation awareness: exploring pilots’ design requirements for decision support tools. In CHI TRAIT’22: Workshop on Trust and Reliance in Human-AI Teams at CHI (pp. 1–12).

[6] Armstrong, K. (2023). ChatGPT: US Lawyer admits using AI for case research. BBC. https://www.bbc.com/news/world-us-canada-65735769

[7] Burt, A. (2019). The AI Transparency Paradox. Harvard Business Review. https://hbr.org/2019/12/the-ai-transparency-paradox

[8] Chu, Y., & Liu, P. (2023, July). Human factor risks in driving automation crashes. In International Conference on Human-Computer Interaction (pp. 3–12). Cham: Springer Nature Switzerland.

Designing safe and trustworthy AI systems was originally published in UX Collective on Medium.