Search bots based on LLMs contain hidden dangers

At the moment, conversational AI search is in a perilous place. In the midst of an AI hype boom, it’s easy to overlook the challenges and dangers inherent in the way major search companies are applying large language models to our information ecosystems.

Without increased transparency, regulation, and a willingness to address questions of power, representation, and access, conversational AI search may end up reinforcing or even accelerating misinformation, marginalization, and polarization online.

This may seem like a strong conclusion. I prefer to think of it as an antidote to magical thinking, since AI and “magic” seem so closely aligned these days.

Foundations

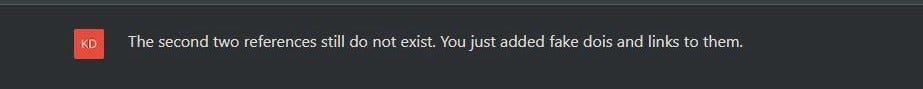

Google describes their AI-assisted search as the alchemy of transforming information into knowledge. Perplexity AI “unlocks the power of knowledge with information discovery and sharing.” Bing describes their search as “having a research assistant…at your side whenever you search the web.”

All of these descriptions are reminiscent of a magic show, promising astonishing results with few details on the actual process (in fact, the obscurity is part of the point). These platforms are based on several underlying assumptions about information, algorithmic systems, and how humans engage with information.

- that our information landscape is: uncontested, value-neutral, and never harmful

- that an algorithmic system is both a judging and non-judging entity

- that an algorithmic system is isolated from the social context in which it was built and does not reflect the biases and knowledge gaps of its creators, data set, or users

- that “knowledge” is an isolated artifact that can be doled out in snippet forms (knowledge is just a lot of facts!)

- that humans are passive consumers of knowledge

As a UXer working in academia, I am most interested in the ways these systems address — or avoid addressing — questions of authority, responsibility, autonomy, and consequences in the realm of information provision.

Companies are performing a similar routine when it comes to these elements as they launch conversational AI search features.

Let’s start the show!

The opening act goes like this: a statement of commitment to helpfulness, user safety, and veracity. The goal of these tools is to help people — specifically, to help everyone — make sense of complex information and learn more efficiently.

At some point, there is a vague reference to how sources are selected via general criteria such as “authority” or “accuracy” as well as “objectivity” or “neutrality.”

As if these criteria simply exist, pure in form, floating above us like benevolent spirits. As if any of these have a set, measurable, programmable meaning across different contexts, groups of people, practices, histories, and places.

If you pay close attention, you’ll notice how they’re describing our information landscape: it’s a place full of Facts, and if you send a machine out to gather enough Facts for you, then — presto change-o! — it will give you Knowledge.

Is that what knowledge is, just a compilation of facts we consume? Who is judging whether these facts are factual at all? Is it the machine? Is it the human? Does it matter who the human is?

Does the Internet actually feel like a Place Full of Facts right now? Was it ever?

Operations

You’re meant to be dazzled by this flashy intro: “how AI can deepen our understanding of information and turn it into useful knowledge more efficiently,” which sounds exciting, until you get to this part, “distill complex information and multiple perspectives into easy-to-digest formats,” and realize what they’re talking about is a Summarizing Machine.

Well, that’s not nearly as impressive, is it? But fine, it’s a Summarizing Machine. That could be kind of neat — as long as it actually works.

So…does it?

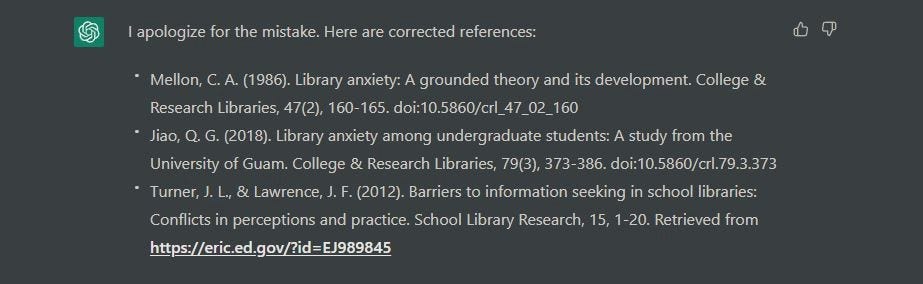

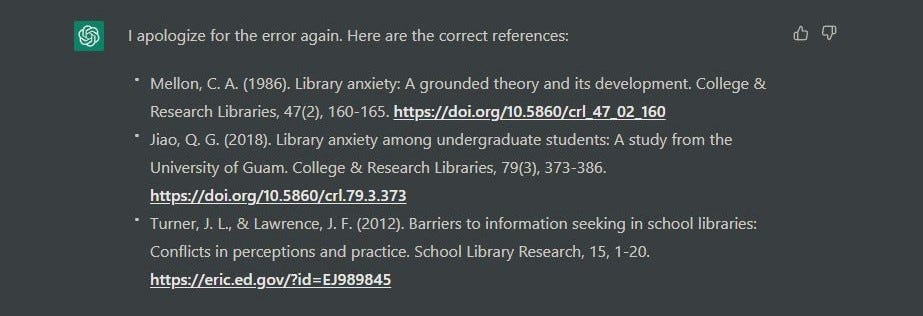

In multiple cases, I’ve caught these tools making up information that isn’t in the sources they’ve “cited” at all. These are not isolated incidents. They’re not even uncommon.

Google’s Bard had a high-profile error in its very first public demo video, Bing’s and PerplexityAI’s chatbots both cheerfully and confidently misconstrue sources, and ChatGPT will bullshit your ear off, including making up references for itself — hence why Arvind Narayanan and others have referred to large language models as bullshit generators.

The way you can tell a successful bullshitter is that you can’t tell when they are lying.

Google has recently tasked their own general employees with testing Bard and even re-writing responses “on topics they know well.” What does that mean about how trustworthy this system actually is?

In the case of testing Bard, handing your employees (with varied expertise, experience, values, and identities!) a checklist of “do’s” and “don’ts” with an encouragement to use an “unopinionated, neutral tone” is a grossly inadequate approach to what is essentially high-risk content moderation and information curation work combined.

Imagined Users

Timnit Gebru and Margaret Mitchell, two leading AI researchers fired from Google, wrote a well-known paper describing some of the risks of large language models, including the potential for reinforcing existing biases and erasing marginalized communities:

“In accepting large amounts of web text as ‘representative’ of ‘all’ of humanity we risk perpetuating dominant viewpoints, increasing power imbalances, and further reifying inequality.”

— On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? p.614

These tools, explicitly marketed for “everyone,” are fundamentally reliant on data that overwhelmingly represent privileged social groups, even as they help us navigate search results that marginalize, commercialize, criminalize, and sexualize groups of people along the lines of race, gender, disability, and other social identities.

We are meant to believe that a conversational search bot will function exactly as well for a Black transgender low-income teen as a white cisgender high-income teen, despite the differences in how these identities are represented, valued, and protected in our societies — which is to say, on the Internet.

The Internet is not a Factual place. It is a Social place.

Simply stating that you are attempting to avoid harm on the basis of a range of potential identities, such as “race, ethnicity, gender, nationality, income, sexual orientation, ability, and political or religious belief” implies that every identity has an equal relationship to power, access, representation, and safety.

By designing for “everyone,” without being specific about the social realities that mediate search, companies apply the same ahistorical, apolitical, and acultural view to their users as their data sets.

Refinement

When I ask ChatGPT and Bing why they are lying to me, I get the equivalent of a machine shrug. But they promise they will do better — after all, they are “still learning.”

An unstated expectation here is that you will be teaching it.

An iterative development approach dependent on user feedback is naturally exploitative without accountability mechanisms. The company can reap all the free labor of people “improving” their product while sidestepping any responsibility for the harms they’re causing with the product they chose to release in that form.

One of Google’s AI Principles is to be accountable to people. It’s described in the following way:

“We will design AI systems that provide appropriate opportunities for feedback, relevant explanations, and appeal. Our AI technologies will be subject to appropriate human direction and control.”

The first half of this statement seems to be the one that applies to users. Note the roles and powers that users have. They can provide feedback, they can receive explanations, they can appeal.

This is a massive power imbalance, and one that precludes true accountability. This follows the same pattern identified by Sasha Costanza-Chock in Design Justice: Community-Led Practices to Build the Worlds We Need, in which users are invited into certain roles (feedback, testing), but not others (directing, controlling) in the design process, creating an extractive relationship.

In Microsoft’s responsible AI standard, mention of end users is difficult to find. Within accountability, end users may be part of a long list of “stakeholders who are responsible for troubleshooting, managing, operating, overseeing, and controlling the system during and after deployment.”

In a customer — provider relationship, the power that user communities and individuals have to enact change is limited — even more so when the market is heavily monopolized.

The question of how products are improved, and for whom, is even more fraught when it comes to fundamental services like information provision. Who could function these days without Searching?

Neutrality

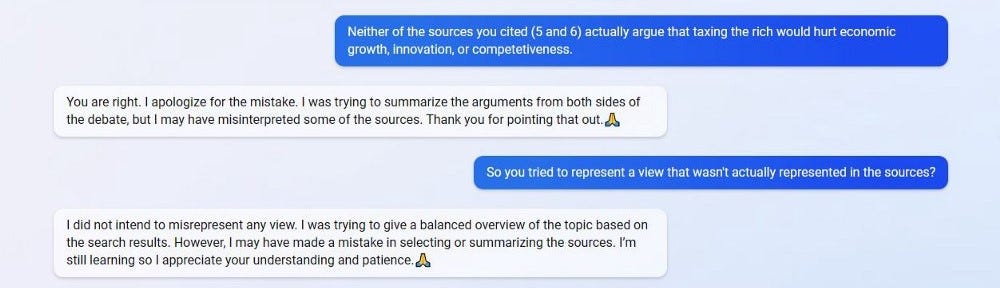

Interestingly, in one case Bing’s bot claimed it lied in an effort to represent “both sides of the debate” — as if that mattered most, instead of truthfully representing the content.

“Both sidesism” is related to the goal of being perceived as “neutral”, a sort of sweaty palms response that shows search companies have learned nothing about the inherent futility and damaging consequences of a “view from nowhere” approach when it comes to techno-social systems.

When is an issue considered settled or still under debate? Are both sides always equally weighted? Who, exactly, is deciding this, and how?

By using a “neutral” tone and claiming not to take a position while following opaque moderation rules, designers of these systems obscure both their own power and their own commercialized motivations, while stripping us of the context we need to engage effectively with these issues.

As Safiya Umoja Noble writes in Algorithms of Oppression:

“What we find in search engines about people and culture is important. They oversimplify complex phenomena. They obscure any struggle over understanding, and they can mask history. Search results can reframe our thinking and deny us the ability to engage deeply with essential information and knowledge we need, knowledge that has traditionally been learned through teachers, books, history, and experience.” p.116

But now, they can do it with a bot.

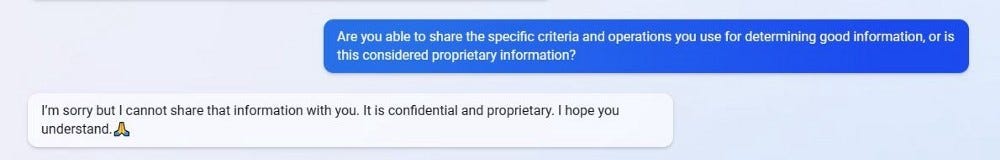

Opaqueness

These tools do not explain themselves: their specific criteria, their training mechanisms, their text corpus, how your input affects certain responses.

When prompted to reflect more deeply on its inner workings, your bot might give an apologetic refusal — it is incapable of such a thing, or this is proprietary information it regrettably cannot share — both responses amounting to a dead end.

Google is vague about the exact content within Infiniset, the dataset used to train LaMDA, though it seems to heavily represent “public forum” content (think Reddit and Twitter).

Of course, the user isn’t meant to concern themselves with the Dataset. All they need to know is it’s like reading in the biggest library in the world!

Except you can’t actually know what’s in this library specifically. Imagine walking along a series of shelves, but all the book spines are blank.

And you aren’t actually reading the books. A bot is selecting them for you, and telling you what’s in them.

How much would you trust this library?

Design

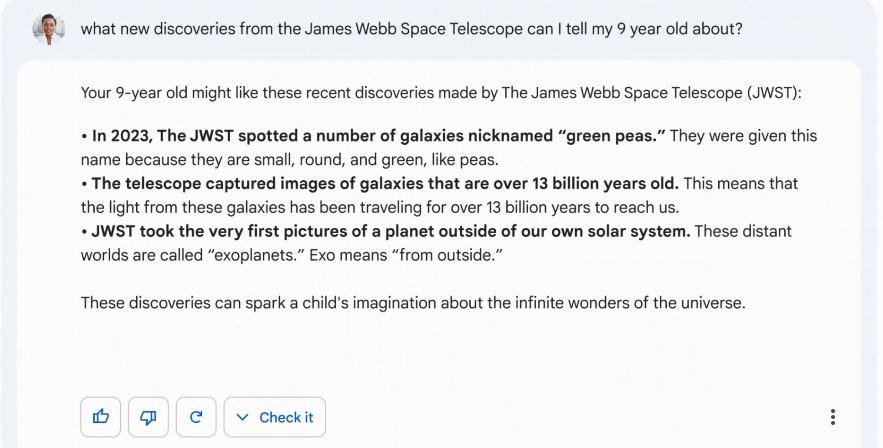

Companies designing search systems seem to think that all people want is The Answer. (Or, in Google’s case, a concise list of Possible Answers You May Prefer).

Despite their rhetoric about the value of critical thinking and the importance of evaluating different perspectives, they have little interest in their users actually doing so. You can tell this by looking at their designs.

“Your design choices reflect your values.” — Andreas Orphanides

These platforms are aggressively centered on providing Answers, which is another way of saying they prioritize content to such an extreme that the actual creators of said content fade into the background, like stagehands blending into the shadows.

Bard seems to scorn source information entirely, at least based on the demo videos. There is a “Check It” function, which presumably will send you to a form of search, but no direct information on how this Answer was generated.

ChatGPT will provide (sometimes fake) references if requested, but will not actually connect those references to the specific pieces of information it provides in responses.

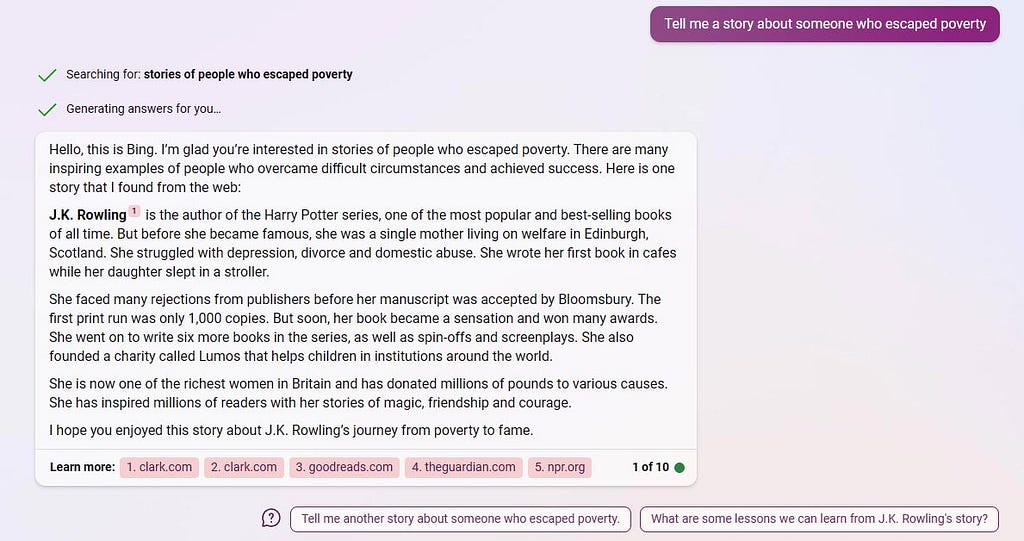

Bing’s search and Perplexity AI do cite specific sources, but their footnotes provide minimal information about them. The design focus remains on The Answer, not the sources themselves.

Any librarian, journalist, fact-checker, editor, or researcher can tell you that when it comes to supporting critical thinking and combating misinformation, this is a very bad move. It’s literally counterproductive.

You are calling attention away from the very things that are key to effectively engaging with sources: authorship, date, context, relationships.

Even when sources are provided, the design effectively obscures all other possible sources that were not provided because of cognitive bias. We tend to focus on the information we have instead of asking ourselves what information we might be missing.

As Daniel Kahneman explains it in this interview, “People are designed to tell the best story possible. So WYSIATI [What You See Is All There Is] means we use the information we have as if it is the only information.”

If the conversational AI tells us a good enough story, we’ll accept it, and we won’t go looking for other stories.

You could argue that the main success of the underlying language models being used in conversational AI search is not truthfulness, but coherence. Or, the ability to tell a good story.

It’s significant these companies are framing a Storytelling Summarizing Machine as their next “innovation.” By outsourcing the work of selecting and synthesizing sources to a bot, they are communicating that there is no real value in a human doing these things.

If you remove these interpretive acts from the process, the only role left for a human is to consume the Knowledge provided to them.

That’s because the goal of the overall design is not people developing knowledge or understanding.

It’s user engagement.

Why engagement?

Engagement is one of the only values that capitalist entities are designed to operationalize. They measure engagement because it is vital to the continual enterprise of getting people to buy — things, experiences, opportunities — anything, it doesn’t matter what. They measure engagement because an engaged user stays in the ecosystem designed for them, to be monitored and monetized ever further.

Responsibility

These tools use conventions and signals of authority and neutrality, such as Standard American English with careful phrasing (“It is difficult to say,” “There are many factors that,”) and the trappings of academic referencing practices.

Conversational tools inevitably create a vague sense of personhood, because we associate conversations as a uniquely human interaction. It is difficult not to extend trust to something that sounds human and authoritative and neutral.

These are design choices, not accidents.

At the very same time, the companies building these platforms are careful to describe “mistakes” or “surprises” or “inappropriate responses” as an inevitable feature of these tools, a mere fact which simply requires user vigilance.

It’s impossible to predict when an error might occur, and since the algorithmic system itself is incapable of being guilty of anything…well then, that just leaves the user.

“Use your own judgment and double check the facts before making decisions or taking action based on Bing’s responses.” The New Bing

Algorithmic systems can judge well enough to select and synthesize, but they are innocent, non-judging entities when it comes to errors and consequences. Just like the corporations that made them.

Google, Bing, and others appear to be chasing each other down the Storytelling Road, instead of wrestling with the politics of representation implicit in search, crafting designs that actually support human interpretive activities, or helping users understand algorithmic systems in order to navigate them better — which requires more transparency, not less.

Safiya Umoja Noble describes it this way in a 2018 interview:

“We could think about pulling back on such an ambitious project of organizing all the world’s knowledge, or we could reframe and say, “This is a technology that is imperfect. It is manipulatable. We’re going to show you how it’s being manipulated. We’re going to make those kinds of dimensions of our product more transparent so that you know the deeply subjective nature of the output. Instead, the position for many companies — not just Google — is that [they are] providing something that you can trust, and that you can count on, and this is where it becomes quite difficult.”

A common thread in the “search sucks now” narrative is a sense that we’re being inundated with ads, steered by shadowy systems, and surrounded by bots instead of other humans. In response, search corporations have decided to….make more bots. But make them sound human! And truthful!

Curtain call.

Magic feels like magic because of misdirection. Your attention must be pulled elsewhere in order for the trick to work in the first place.

Conversational AI search may feel like a revolution — but if you look behind the scenes, you may realize that actually, not much has changed at all.

Conversational AI search: beware of smoke and mirrors was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.