Guidelines and strategies for meaningfully leveraging AI in your applications

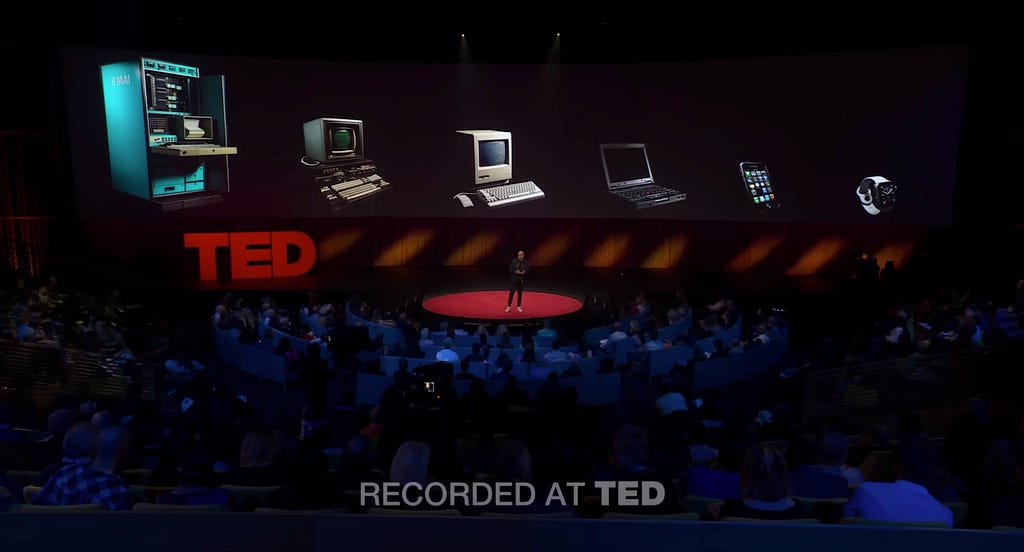

With the recent advancements in AI, we, as designers, builders, and creators, face big questions about the future of applications and how people will interact with digital experiences.

Generative AI has opened up huge possibilities for what we can do with the technology. People are now using it to write articles, generate marketing and customer outreach materials, build teaching assistants, summarize large amounts of information, generate insights, and more.

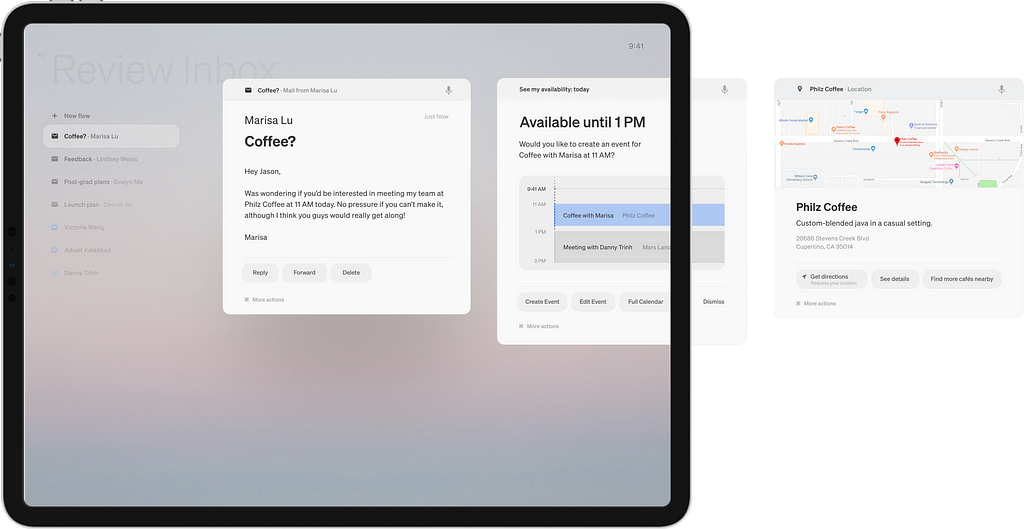

Given the lightning pace of innovation in this space, it’s not hard to imagine a future where instead of switching between various apps, users may interact with a central LLM operating system that acts as an orchestrator, where experiences will be much more ephemeral, personalized and contextual in nature. The Mercury OS concept is a sneak peek into this possible future.

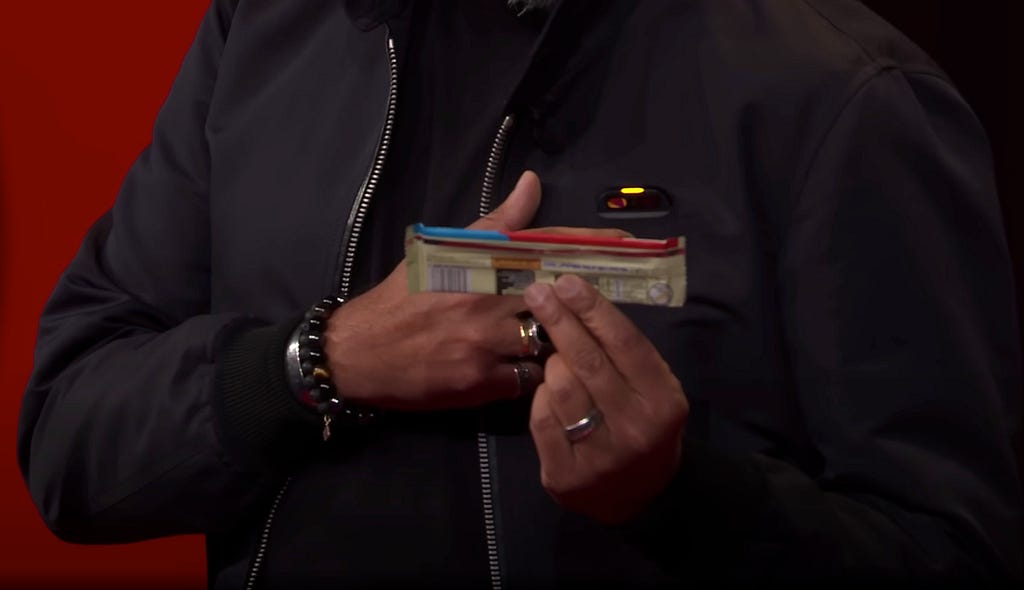

Furthermore, we can anticipate the rise of multimodal experiences, including voice, gesture, and holographic interfaces, which will make technology more ubiquitous in our lives. Imran Chaudhri from Humane recently demoed a possible screen-less future where humans interact with computers through natural language.

The possibilities for how people will interact with technology and AI in the coming years are boundless and exciting.

But coming back to the current state of AI: most businesses are still trying to figure out how best to leverage this technology to provide value for their customers, and are still exploring ideas for their first integrations.

Unfortunately, I see many products simply adding a free-form AI chat interface to their applications, in the hope that people will invoke the assistant when they need it, ask a question, and get a good response. This still puts the onus on the user to switch context, draft a good prompt, and figure out how to use the generated response (if it is useful) in their work.

There is still a lot of unexplored territory where AI can be helpful in meaningful ways today. Finding it involves going deeper into our users’ problems, understanding the job they are trying to do, and having a keen awareness of the current possibilities and limitations of AI.

Having designed machine learning experiences for some time now, I’ve had the opportunity to gather some strategies and best practices for meaningfully integrating AI into user workflows. My hope is that these strategies are useful for designers and product folks as they think about accelerating their users’ workflows with AI.

#1 Identifying the right use cases and user value

Before taking on an AI project, it’s important to note that not every project needs AI, and that AI should not itself be the value proposition you’re offering your users. AI is merely a way to enhance your application’s value proposition by providing one or more of these benefits:

1) Reducing the time to task

2) Making the task easier

3) Personalizing the experience to an individual

It’s also important to be clear about what users are trying to do with your product. What does the ideal experience look like for them? What currently stands in the way of that ideal experience? You can then identify which parts of that journey can be enhanced with AI. Are there any parts that can be automated or made easier?

Some good examples of AI enhancing the value proposition and making the customer’s job easier: setting up automated alerts on a business dashboard, auto-categorizing emails into buckets in Gmail without the user having to set up rules, and automatically detecting suspicious activity on home security cameras.

#2 Providing contextual assistance

Since AI models can now understand language, context, and user patterns, they can be leveraged to offer users much more contextual suggestions, guidance, and recommendations.

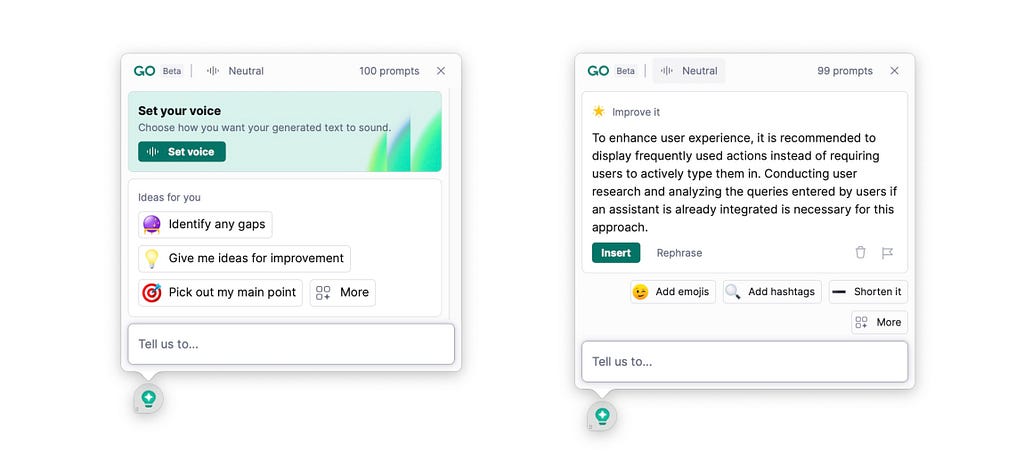

As an example, GrammarlyGO does a good job of presenting relevant actions such as “shorten it” and “identify any gaps” to users when they select a body of text. This is a great first step in providing contextual assistance.

But we also need to take this further and think about how we could make these suggestions even more personalized and relevant for users.

For example, if the user were sending an email to their entire leadership chain rather than a peer, what would they want to keep in mind? If a user were writing a research paper for a journal, what additional information would be useful to them? Or if a user were writing a piece for the NYT, what information would be useful?

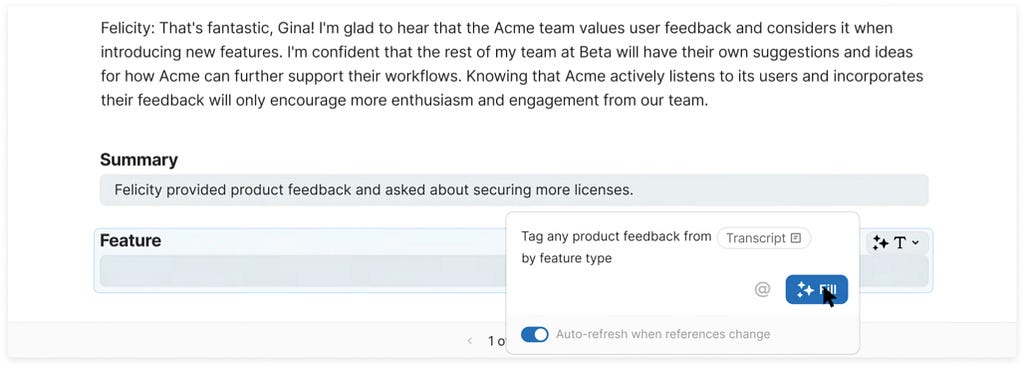

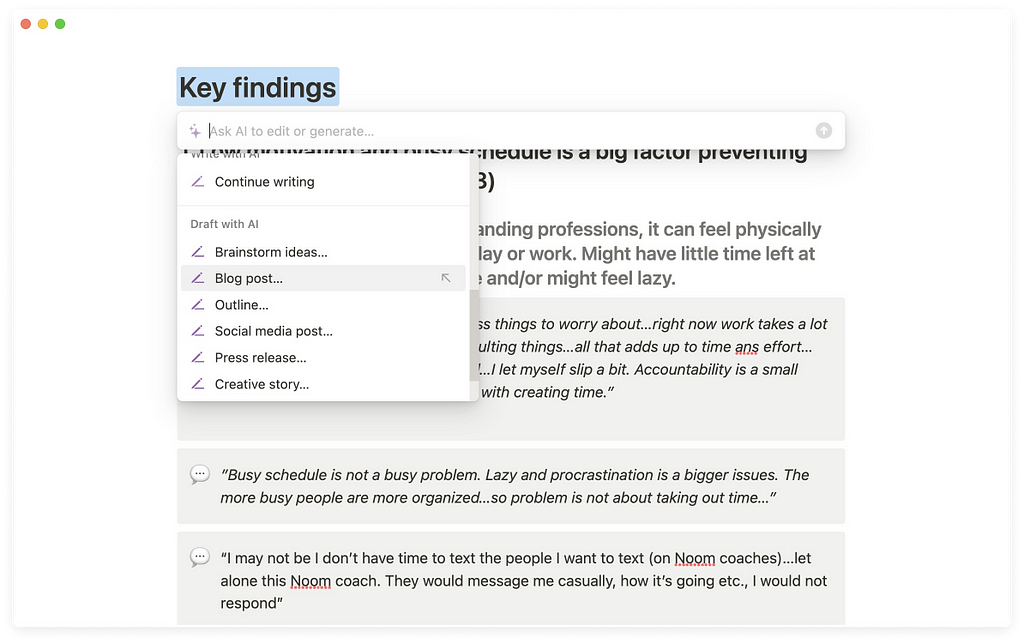

Both Notion and Coda also do a good job of recommending common AI actions in the flow of the user’s work, without making them shift to a different context altogether. This makes working with AI feel like a part of the user’s natural workflow; it blends with the rest of the experience without drawing too much attention to itself.

Another great example of contextual integration is GitHub Copilot, which fits into the natural workflow of writing code. It adds accelerators without interrupting users or making them shift their attention to another window or part of the application to get reasonable results.

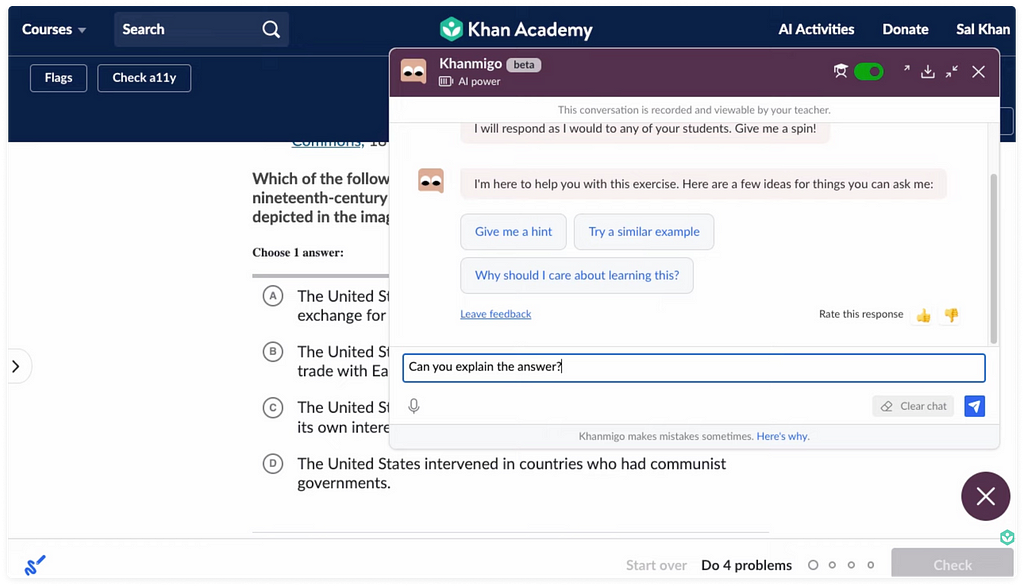

That being said, it’s important to recognize the nature of assistance the user might require, since not all experiences need to be fully contextual. For example, Khan Academy built Khanmigo as an AI assistant that helps students get unstuck and works like a teaching assistant: present in the background, but available when you need it. In this case, a chatbot-like experience seems like a great start to help students without interrupting their learning flow.

One thing to note when designing contextual experiences is that they are only useful if the AI model is aware of the user's current context and what they are working on right now or have previously worked on. Without this contextual understanding, we can only get so far in providing meaningful suggestions, recommendations, or guidance to the user.
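To make the point about context concrete, here is a minimal Python sketch of how an application might bundle what it knows about the user's current work into the request it sends to a model. Everything in it is an assumption for illustration: the `UserContext` fields, the `build_prompt` helper, and the prompt layout are hypothetical and not taken from any of the products mentioned above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UserContext:
    """Hypothetical snapshot of what the user is working on right now."""
    document_type: str   # e.g. "email" or "research paper"
    audience: str        # e.g. "leadership chain" or "peer"
    selected_text: str   # the text the user has highlighted
    recent_actions: List[str] = field(default_factory=list)


def build_prompt(action: str, ctx: UserContext) -> str:
    """Combine the requested action with the user's context into one prompt."""
    lines = [
        f"Task: {action}",
        f"Document type: {ctx.document_type}",
        f"Audience: {ctx.audience}",
    ]
    # Only mention history when the application actually has some.
    if ctx.recent_actions:
        lines.append("Recent actions: " + ", ".join(ctx.recent_actions))
    lines.append("Selected text:")
    lines.append(ctx.selected_text)
    return "\n".join(lines)


ctx = UserContext(
    document_type="email",
    audience="leadership chain",
    selected_text="Here is a long status update about the project.",
    recent_actions=["fixed grammar"],
)
prompt = build_prompt("Shorten it", ctx)
print(prompt)
```

The same "Shorten it" action would produce a different prompt for a peer than for a leadership chain, which is the kind of personalization a contextual suggestion depends on.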

#3 Optimizing for creativity and control

Another important thing to keep in mind when designing creation experiences (i.e. when users are creating something like a blog, image, or website) is the level of creativity and control users need, so they still feel in control of the task and the outcome.

While tools like Midjourney and DALL-E provide an incredible amount of creative expression to users, they can be limiting when it comes to editing the generated image.

AI integrations for creation experiences should help users create a great starting point for their work, and give them all the tools they need to feel in control and make changes whenever needed. E.g. when creating a visualization using natural language, it’s important to optimize for creating a useful visualization by default, but also equally important to give users all the tools for editing/manipulating the visualization when they need it.

Recent releases such as Adobe Firefly show a promising step in this direction: the tool still gives users control over various aspects of the image after it is generated.

#4 Helping build good prompts

Another barrier people face in getting helpful responses and making the best use of LLMs and other natural language AI models is figuring out the right prompts to use.

I see many posts and courses springing up on prompt engineering, along with “cheat sheets” on how to build good prompts. There’s a need for education and awareness about the right ways to engage with these models to get better results, especially for more specialized tasks.

Zain Kahn on Twitter: "Everyone is talking about ChatGPT. But only 14% of people have used it (according to the latest survey by Pew Research). If you're one of the 86% who haven't used ChatGPT yet, here are 10 beginner prompts to help you get started:"

Some tools like Adobe Firefly present a great library of generated images and prompts when you first land on the tool. It encourages exploration of what’s possible and helps users get more ideas on building useful prompts.

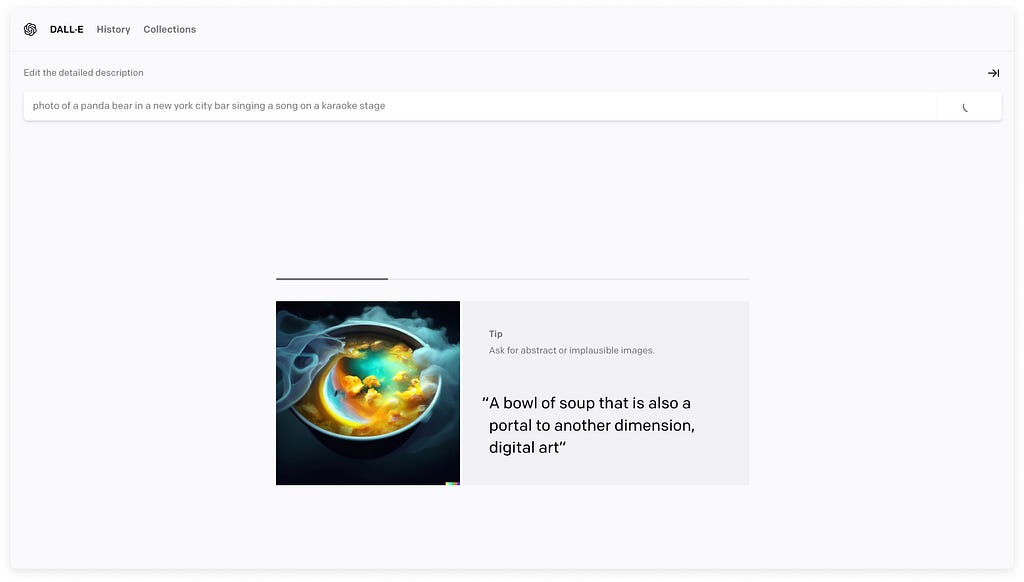

Instead of showing various examples upfront, you can also consider leading with just a few to help people get started and then showing tips or suggestions progressively. For example, while users wait for a generated image, DALL-E presents prompts and tips to encourage learning.

Notion, too, gives users suggestions on how they can leverage the contextual assistant for language tasks, which can help spark users’ creativity in writing good prompts.

#5 Focusing on getting quality results

Needless to say, an AI model is only as useful as the results it provides to users. To achieve good, meaningful results, it’s important to train models on datasets that closely represent users’ actual workflows. It’s also important that the training data covers a wide variety of use cases that are likely to occur in the real world, not just a few happy paths.

Designers can help product teams by not only creating prototypes and demos of what’s possible with AI but also being involved in the data conversations to help identify and sometimes even gather the right training data for that use case.

Designers can also help define what good-quality results look like for users, which can influence the model development process. They can help decide how to handle cases when bad results are generated, and what types of feedback mechanisms need to be built to understand model performance and improve it over time.
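One simple form a feedback mechanism could take is recording whether users accept or reject each AI result and tracking the acceptance rate per feature. The Python sketch below shows that idea only; the `Feedback` record, the `acceptance_rates` function, and the feature names are hypothetical, and a real product would likely capture richer signals such as edits or free-text comments.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Feedback:
    """Hypothetical record of one user reaction to an AI-generated result."""
    feature: str    # which AI feature produced the result
    accepted: bool  # True if the user kept or approved the result


def acceptance_rates(events: Iterable[Feedback]) -> Dict[str, float]:
    """Return the share of accepted results for each feature."""
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for event in events:
        totals[event.feature] += 1
        if event.accepted:
            accepted[event.feature] += 1
    return {name: accepted[name] / totals[name] for name in totals}


events = [
    Feedback("summarize", True),
    Feedback("summarize", False),
    Feedback("shorten", True),
]
rates = acceptance_rates(events)
print(rates)
```

A feature whose acceptance rate stays low is a candidate for better training data, a different interaction pattern, or a clearer explanation of what it can do.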

#6 Clarifying the use of AI and its limitations

The challenge with AI models like LLMs is that the results can seem so trustworthy, even though they might not always be accurate.

It’s really important to build mechanisms that remind users of the limitations of these AI models, especially if the results could influence very important decisions. For example, interacting with AI-generated recommendations might have lower consequences in a user’s life than using AI to detect cancer from a medical examination result.

At a high level, AI will play a huge role in shaping the future of how people interact with technology. However, as more and more businesses integrate AI into their products, it’s important to consider how AI can be seamlessly integrated into a user’s workflow, rather than being a separate agent living in its own separate space.

While designing for AI was previously something only a small number of design or product folks had the opportunity to work on, we’re now seeing a rapid rise in teams incorporating AI into their products. So it has become even more important for us to have conversations about how to build AI products responsibly.

This is certainly a rapidly evolving space and we’ll continue to discover more of these strategies and guidelines for meaningfully interacting with AI. I’d love to know your thoughts and any other examples or guidelines that would be useful to append to this list.

If you’re interested in exploring this topic in more detail, here are some resources for further reading:

- “How to not add AI to your product”: a startup’s journey of trying to integrate AI into an online form builder.

- “Decoding The Future: The Evolution Of Intelligent Interfaces”: an article by Rachel Kobetz on the future of digital experiences with AI.

- “The Essential Guide to Creating an AI Product”: a comprehensive guide by Rahul Parundekar on creating an AI product, all the way from planning to implementation.

- “Language Model Sketchbook, or Why I Hate Chatbots”: a brilliant collection of ideas by Maggie Appleton on approaches to explore with LLMs.

- “What is the role of an AI Designer”: an article by Amanda Linden on how designers will play a role in AI design.

- The People + AI Guidebook by the People + AI Research (PAIR) team at Google.

“Designing for AI: beyond the chatbot” was originally published in UX Collective on Medium.