The case for authenticating humans, reclaiming our identities, and defending against the coming swarm of bots.

It’s 2022 and we are living in the opening scene of a dystopian Black Mirror episode.

The line between what is real and what is fake has already blurred. The line between who is real and who is fake is now rightfully getting much more attention. It’s all over the daily news:

- Elon Musk claims more than 20% of Twitter accounts are bots but no one seems to actually have a real number — not even Twitter themselves (although they claim it’s less than 5%).

- A Google engineer was put on leave for suggesting they’ve developed a chatbot that is sentient (can think for itself)

- ABBA now performs live on stage (as avatars) to the delight of throngs of fans

Sure, while it’s still easy to tell it is not actually Björn shimmying on stage, it is becoming increasingly difficult to tell the humans from the robots. I mean, what will happen once machines become better than us at correctly identifying the three squares of the nine-square grid that contain traffic lights? Then what?

As actual humans, we had better protect ourselves before the bots learn how to optimize for their own rights and self-interest.

Man vs. Machine

Whether bluffing at an online poker table, taking on a troll in an online forum, or chasing an avatar on your Peloton, I think we have a right to know with certainty if our adversaries are man or machine.

Where is the line?

Bots being bots is no issue. It goes wrong when bots masquerade as human life forms. It’s iffy, but still not too creepy, when an artificially intelligent chatbot answers basic customer support questions. But when an algorithm deliberately misrepresents itself as an actual human, without disclosure, it crosses a bright line.

Consider that researchers at UC Berkeley and Lancaster University have learned that fake human faces can be reliably constructed to be seen as more trustworthy than actual faces.

As humans, we are heavily influenced by “social proof” (hat tip Aileen Lee): the intuitive behavior that when we see others doing or saying something, we give it (sometimes unwarranted) credence, outsourcing the due diligence to others.

Social proof is powerful, and explains why Brits love to join a queue, even when they have no idea what it’s for.

Social proof is why eBay auctions with more bids attract new bidders (start your auctions at $0.01, people), why startups with existing investors more easily get follow-on investors, and so on.

Social proof works well when the proof is from real humans. But it is a social disaster when huge numbers of bots parrot a false narrative to manufacture artificial confidence by manipulating our instinct to trust the wisdom of crowds.

If so many people are saying so? It must be true.

In March, the BBC reported a discovery that 75 Twitter accounts managed by the Kremlin use a so-called “astroturfing” playbook where legions of bot accounts amplify constructed narratives through a coordinated network of millions of fake accounts retweeting fake news: “75 Russian government Twitter profiles which, in total, have more than 7 million followers. The accounts have received 30 million likes, been retweeted 36 million times and been replied to 4 million times.”

We need to know who is man and who is machine.

Let’s be realistic though. We can’t expect the bots to identify themselves as such. Instead, it will have to be the other way around: we must find a way for humans to authenticate as human, and demonstrate proof we are actually real.

Humans deserve privacy…

As a European resident, I benefit from the culture, policy, and technology that is invested in protecting personal privacy. Legislation like the EU’s 2018 General Data Protection Regulation (“GDPR”) protects consumers like me from unwarranted data collection and over-reaching tracking, and offers defense against a tsunami of invasions of personal privacy both online and off.

European legislators believe humans have a right to own their own privacy, and have even written into law “the right to be forgotten,” which gives people a legal mechanism to be deleted from databases and to have all personally identifiable tracking data erased.

I agree with the principle that individuals should have full control over their personal identification and their own privacy — revealing what they choose to in exchange for convenience, personalization, or relevance.

…yet, anonymity is toxic

While humans have the right to protect personal privacy, we’ve also learned that humans acting anonymously breeds toxicity, because anonymity removes accountability. One need only browse social media to see trolls emboldened by their anonymity as they post from their parents’ basement. Newsweek describes anonymous trolling as the “online disinhibition effect.” Yet online bullies so often stand down and de-escalate in non-anonymous, face-to-face interactions.

Anonymity in public forums (Reddit, Twitter, YouTube) has clearly accelerated social polarization where humans take extreme positions in order to maximize attention, earn social currency, and ultimately manipulate the social narrative. And while we think anonymous humans act badly, the bots are much worse.

Online forums that encourage non-anonymous participation have a moderating effect: amplifying actual human voices, while sidelining and discrediting the anonymous fringe. Yelp, in its earliest days, deliberately seeded local communities with real locals, initially paying freelance writers to post real reviews in their own voices, using their actual first name and last initial. The community that subsequently joined followed their lead, using their own personal names as their handles and offering thoughtful, more nuanced critique. Twitter, Instagram, and other social media platforms also utilize authentication (example: the blue tick on Twitter) to various effect, offering credibility to authenticated human contributors, which in turn counterbalances the hyperbolic content contributed by those who hide behind anonymity. Tl;dr: being non-anonymous results in more moderate behavior.

Technology is accelerating the problem

We didn’t use to worry too much about the infiltration of fake humans into our lives. The technology wasn’t there, and our intrinsic ability to detect fakes was, until now, mostly good enough. But the technical barriers to creating unlimited fake human profiles online are gone. The cost of creating millions of fake digital profiles is negligible, while the possible destruction such profiles can cause has suddenly become unfathomable.

Skeptical? Look no further than the risk of even perceived voting fraud, let alone the devastation of actual voting fraud.

Bringing it all together.

We suddenly face a toxic cocktail concocted of very alarming ingredients:

- large numbers of bots already misrepresenting themselves as humans

- no way of differentiating between human and non-human accounts

- technology that makes the bots look more trustworthy than actual humans

- an innate human desire for personal privacy

- reliable devastation created by anonymity online

- accelerating speed of technology and artificial intelligence that is only blurring the lines ever faster.

This might not end well.

What to do?

At the very least, we must find a way to securely, privately, and non-anonymously create a trustworthy credential that authenticates each of us as human.

Ideally a non-anonymous human authentication with fully controllable individual privacy settings secured by a trusted neutral party.

Wait, what? There’s a lot to unpack here, so let’s take it one concept at a time.

“non-anonymous human authentication”

At financial institutions (banks) everywhere around the world, there are common and often legally required fraud-abatement practices called KYC (“know your customer”) that apply whenever customers open new accounts. The process involves passports, drivers’ licenses, utility bills, birth certificates, and other official documentation, a combination of which assures the bank that (a) you are a real person, and (b) you are who you say you are. Banks require this authentication to manage their risk.

These same KYC principles are needed for online platforms and services.

Welcome to New Social Media Corp. To activate your account, either scan and submit four forms of ID, or simply authenticate with your HumanID.

“fully controllable individual privacy settings”

Consumers should only have to share and provide personal information that they choose to — and will generally want to share only as much as is needed, but not more.

Imagine a hierarchical graduated pyramid of personal information starting at the lowest level (“Level 0”) — which asserts you are an actual unique human being — gradually cascading to age, gender, nationality, home address, billing information, tax ID, medical conditions, all the way to (gasp!) genetic code (“Level 999”).

For example, upvoting posts on a public forum might only require “Level 0” authentication (twixbar2967 is an authenticated human being) while getting a quote for car insurance might require “Level 17” (fordfan4957 is a 32-year-old male with a high school diploma, married, living in Ann Arbor, Michigan, one speeding ticket, two fender benders, 14 years of driving experience).

Fully controllable individual privacy settings means that while the data has been authenticated as real by a third party, access to it is controlled by the individual, who reveals it only for their own benefit and only at their own discretion.
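
To make the pyramid concrete, here is a minimal sketch in Python of how graduated disclosure might work. Everything in it is an assumption for illustration: the `HumanCredential` class, the `ATTRIBUTE_LEVELS` table, the requester names, and the level assigned to each attribute are all hypothetical, and none of it describes a real HumanID or web5 API.

```python
from dataclasses import dataclass, field

# Hypothetical level assigned to each attribute; the numbers are illustrative only.
ATTRIBUTE_LEVELS = {
    "is_unique_human": 0,
    "age": 5,
    "city": 10,
    "driving_record": 17,
    "genetic_code": 999,
}


@dataclass
class HumanCredential:
    handle: str
    attributes: dict
    # Highest level the holder has agreed to reveal to each requester.
    consents: dict = field(default_factory=dict)

    def grant(self, requester, max_level):
        """The holder decides how far up the pyramid a requester may see."""
        self.consents[requester] = max_level

    def disclose(self, requester, level):
        """Return only the attributes at or below the requested level."""
        if level > self.consents.get(requester, -1):
            raise PermissionError(f"{requester} is not cleared for level {level}")
        return {
            name: value
            for name, value in self.attributes.items()
            if ATTRIBUTE_LEVELS[name] <= level
        }


credential = HumanCredential(
    handle="fordfan4957",
    attributes={
        "is_unique_human": True,
        "age": 32,
        "city": "Ann Arbor",
        "driving_record": "one speeding ticket, two fender benders",
    },
)
credential.grant("public-forum", 0)
credential.grant("car-insurer", 17)

print(credential.disclose("public-forum", 0))   # the forum learns only that a human is behind the handle
print(sorted(credential.disclose("car-insurer", 17)))  # the insurer sees the attribute names up to Level 17
```

The point of the sketch is that the holder, not the platform, sets the ceiling: a forum asking for anything above Level 0 gets a refusal rather than the data.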

“trusted neutral party”

This is perhaps the hardest piece of the puzzle to crack. Who on Earth is trusted enough to play this role? The big four accounting firms? The Swiss government? Big tech? None of the above?

This is where blockchain technologies may really deliver on their promise: a public and unalterable ledger can act as a single source of truth, taking away the need for a trusted third party at all.
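
For a feel of what makes such a ledger hard to alter, here is a small Python sketch of a hash chain, where each entry commits to the one before it. It is a simplification under stated assumptions: the `Ledger` class and the record contents are hypothetical, and a real blockchain adds distribution and consensus on top of this, which the sketch leaves out entirely.

```python
import hashlib
import json


def entry_hash(prev_hash, payload):
    """Hash an entry together with the hash of the entry before it."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class Ledger:
    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, payload):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = entry_hash(prev, payload)
        self.entries.append({"prev": prev, "payload": payload, "hash": digest})
        return digest

    def verify(self):
        """Recompute every hash; any edited entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True


ledger = Ledger()
ledger.append({"handle": "twixbar2967", "claim": "authenticated human"})
ledger.append({"handle": "fordfan4957", "claim": "authenticated human"})
print(ledger.verify())  # chain is intact

ledger.entries[0]["payload"]["claim"] = "definitely not a bot"
print(ledger.verify())  # the tampering is detected
```

Anyone holding a copy can rerun `verify()` themselves, which is the sense in which no single trusted party is needed to vouch for the record.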

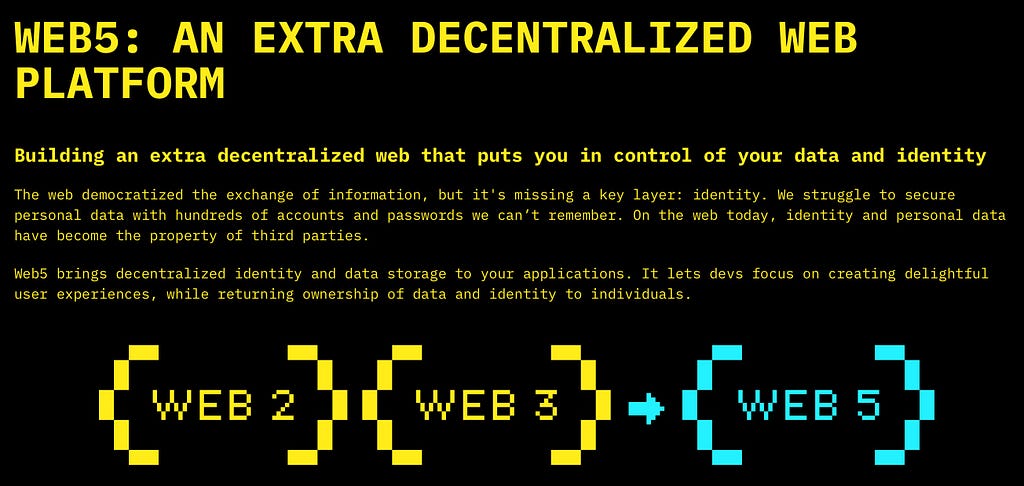

And just this week, tech pioneer Jack Dorsey (Twitter, Block) announced his so-called “web5” ambitions along exactly these lines.

The announcement website makes it plain: “The web democratized the exchange of information, but it’s missing a key layer: identity.”

I welcome such an unalterable record that can protect my rights as authenticated human over the rights of the non-authenticated, while protecting my personal privacy, and putting me back in control.

We need this.

There are so many doubts and questions. It’s really early to predict where this goes. But, the need is clear, and the technology exists.

What’s now needed is great execution of a set of neutrally governed systems that truly put the users (me and you) back in control of who we are and what we share.

I guess that makes me a human endorser of web5.

I’m not a robot.

Thank you for reading. I’d love to hear your thoughts. Is web5 the answer? Better ideas? Comments welcome: Humans only.

Follow me? Don’t really care if you’re a bot.

I’m not a robot (but, are you?) was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.