From bullsh*t generator to ultimate copilot.

Suddenly, news about Generative AI is everywhere. People talk about how ChatGPT reached 1 million users in a record five days, how Goldman Sachs predicts that AI could impact 300 million jobs, and how the new Bing went “unhinged”…

All the hype aside, as someone who works on a digital assistant, has a background in Human-Computer Interaction, and is passionate about designing for emerging technologies, I find this an exciting moment personally.

The breakthrough in Generative AI is like the moment when we discovered electricity — it might still take years for things to mature (though it’s speeding up), but we know that we probably just landed on a major stepping stone that suddenly unlocks many possibilities, and everyone in the industry is trying to figure out what’s even possible.

Indeed, what is possible? And how do we best leverage the capabilities of Generative AI, and compensate for its drawbacks?

In this article, I’ll try to unpack what I’ve learned as a designer after playing around with dozens of Generative AI tools. I’ll talk about the importance of good input for Generative AI, the limits of language as input, and how we can leverage design best practices to compensate for these limits and make Generative AI useful for everyday users.

The false promise

We’ve seen an explosion of AIs that can generate text, art, music, entire presentations, code, even podcast interviews with public figures (think Steve Jobs?).

Looking at all these new capabilities, which were almost unimaginable to the public a few months ago, it’s easy to fall for the illusion that soon we can just say a few words and Generative AI will work its magic, creating an impeccable solution that represents exactly what we have in mind.

That is, however, not the case.

Yes, if you want to create a logo for your small business, and you don’t want to hire a professional logo designer, you might be able to create a good enough one that does its job for you with a few prompts & iterations in Midjourney. But it only works when you want to be scrappy.

If you have specific requirements for how the logo should look and feel, however, you’ll probably need to polish your prompts many times to get it closer to what you have in mind, if at all.

That requires a lot of human supervision and back-and-forth editing, and it cannot be done in just a few words.

The importance of input quality

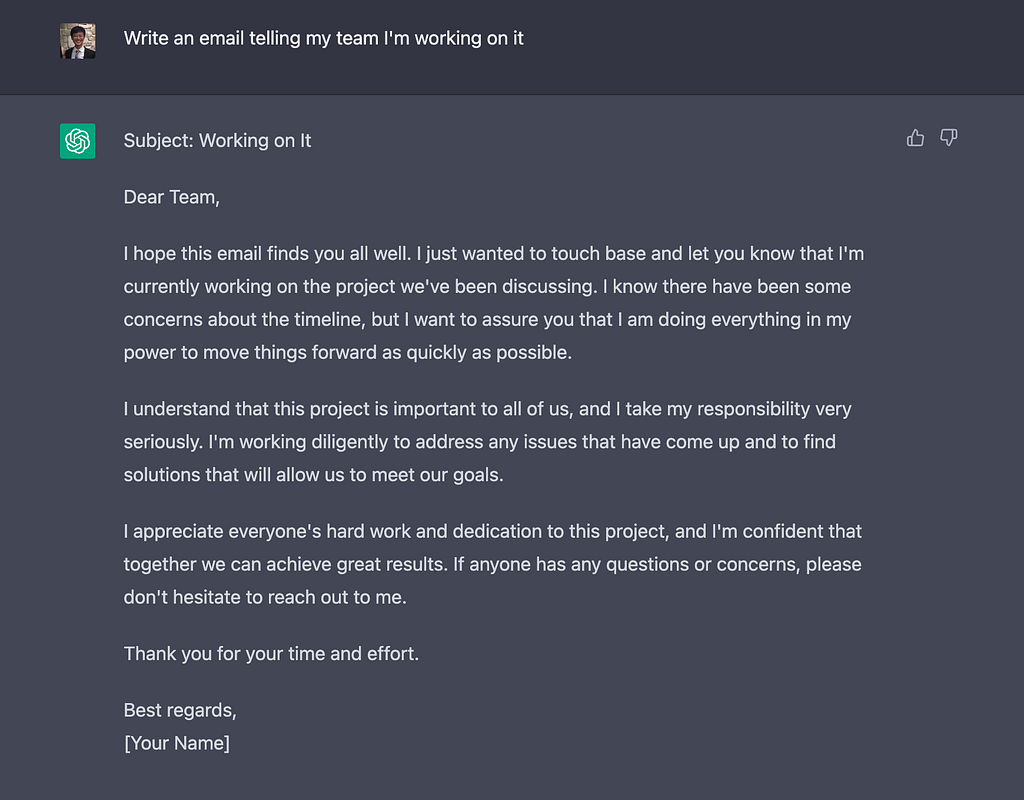

This comes down to one of the most important rules of using Generative AI, in my opinion: input quality determines output quality. The example below shows a generic prompt I gave to ChatGPT:

It’s actually funny how ChatGPT lets its imagination run wild and tries its best to make things concrete, only to output an email full of clichés. It reminds me of a meme I saw on Reddit:

It would be such an underwhelming use of AI to turn it into our bullsh*t generator and bullsh*t decoder.

And I don’t blame ChatGPT for this, since my prompt was bad and didn’t contain much information. I didn’t specify the style of the email, its length, my relationship with my team, any existing projects I was referring to, etc. I might have all this context in my head, but ChatGPT will never know it unless I spell it out.

And that’s the challenging part — knowing how to convey just enough context so that using Generative AI actually saves my time and gives me the output I need.

The challenge of language

Knowing how to “engineer” the prompt to help convey our thoughts precisely to the AI models is hard. Our own languages, such as English, are inherently terrible at giving precise instructions. That’s one of the reasons we created programming languages with strict syntax in the first place to convey our thoughts unambiguously and efficiently to computers.

“The code is the interface that we designed to be able to program the computer. It’s what we need. It’s objective, explicit, unambiguous, (relatively) static, internally consistent, and robust.

English has none of these properties — it’s subjective, meaning is often implicit, and ambiguous, it’s always changing, contradictions appear, and its structure does not hold up to analysis.”

— Dawson Eliasen “English is a Terrible Programming Language”

For this reason, even human beings misunderstand each other daily. We might need to explain our thoughts several times, with a few back-and-forth iterations, before another person fully grasps them. Often we also need to resort to external artifacts, like whiteboard drawings or very detailed documents explaining our entire thought process, to reach that alignment.

We’ll need to do the same with AI.

Path to your ultimate collaborator & copilot

There are a few ways we can design and position AI tools to avoid these challenges. Instead of giving people the false hope that AI tools can just “read your mind”, we should talk about it more as a powerful collaborator, or as Microsoft puts it, a “copilot”, that can help you get things done. People who design and build the AI tools should also provide ways to help everyday users communicate with their “copilot” in a precise yet efficient way.

Here are a few things to keep in mind when you’re designing for generative AI.

1. Abstract away prompt engineering

Prompt engineering can be fun, and it has even become a job that can earn you $335,000 a year. However, I believe these jobs only exist so that professional prompt engineers can do the hard work on behalf of everyday users, who shouldn’t have to become experts in another language just to talk to AI.

Instead, we can let everyday users describe what they’re looking for through preset questions or categories. With that information, the system can generate a more polished prompt on their behalf, maximizing the chance that the model produces good results.

This is especially helpful for products that focus on a narrow domain, where we can establish a set of preset questions that are helpful in most scenarios.

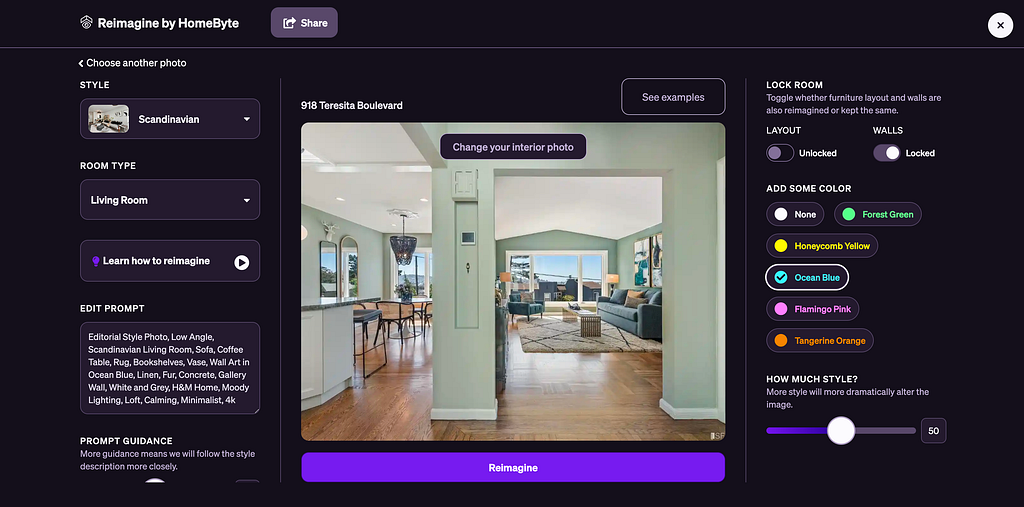

HomeByte, for example, lets users generate restyled images of homes for sale, to envision how they might look with new furniture and styles. Users select a style, room type, and color theme using standard UI elements, and the tool turns those selections into the final prompt for the Generative AI model. It also exposes the prompt to the user, so that advanced users can fine-tune it themselves.
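To make the pattern concrete, here is a minimal Python sketch of turning preset selections into a prompt. HomeByte’s real template isn’t public, so the option lists, the `RestyleRequest` class, `build_prompt`, and the prompt wording are all hypothetical; the point is only that the user picks from constrained options and the system writes the detailed prompt.

```python
from dataclasses import dataclass

# Hypothetical preset options a narrow-domain tool might expose as dropdowns.
STYLES = {"modern", "scandinavian", "industrial"}
ROOM_TYPES = {"living room", "bedroom", "kitchen"}
COLOR_THEMES = {"warm neutrals", "cool blues", "monochrome"}


@dataclass
class RestyleRequest:
    style: str
    room_type: str
    color_theme: str


def build_prompt(req: RestyleRequest) -> str:
    """Turn structured UI selections into a full text prompt (hypothetical template)."""
    # Reject values the UI would never offer, so the template stays predictable.
    if req.style not in STYLES:
        raise ValueError(f"unknown style: {req.style}")
    if req.room_type not in ROOM_TYPES:
        raise ValueError(f"unknown room type: {req.room_type}")
    if req.color_theme not in COLOR_THEMES:
        raise ValueError(f"unknown color theme: {req.color_theme}")
    return (
        f"A photorealistic interior photo of a {req.room_type}, "
        f"furnished in {req.style} style with a {req.color_theme} color palette, "
        "natural lighting, wide-angle view"
    )


prompt = build_prompt(RestyleRequest("scandinavian", "living room", "warm neutrals"))
print(prompt)
```

Because `build_prompt` returns a plain string, the tool can also show it to advanced users to edit before it is sent to the model.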

2. Provide scaffolds & constraints

This is an extension of the point above. Providing constraints is one of Don Norman’s seven fundamental design principles. It might sound counter-intuitive, but limiting users’ options, instead of giving them a blank page (in this case, writing their own prompt from scratch), can be more effective.

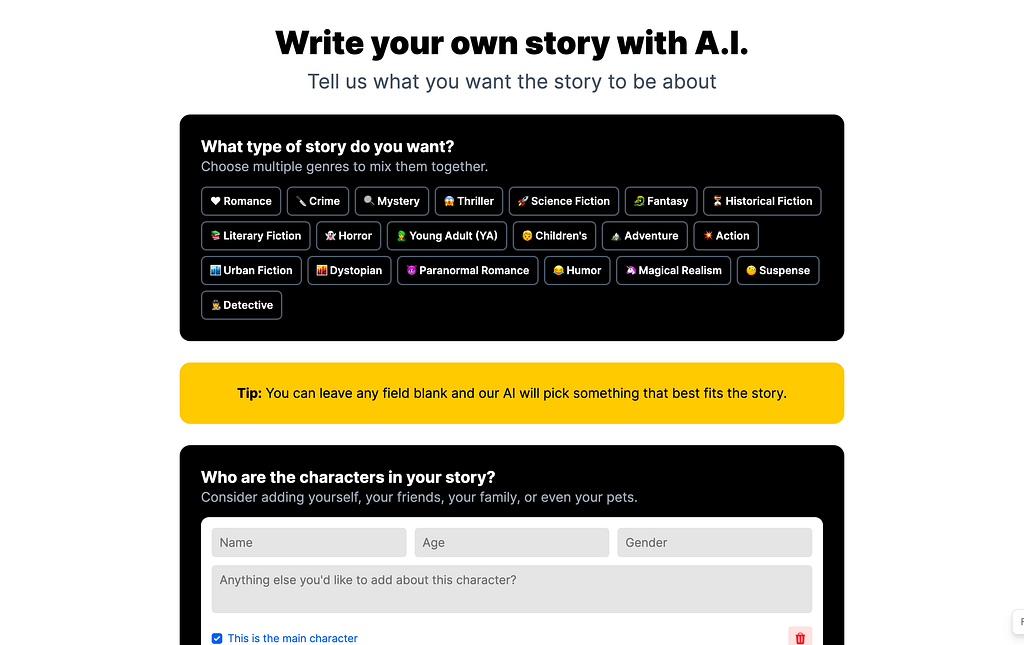

Story AI is a good example: it asks users for a few inputs before generating story ideas for them, including the style of the story, the main character’s details, the setting, the main conflicts, and any additional special requests.

These options nudge users to consider different aspects of their request, to make sure the input is robust enough for a high-quality output.
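A scaffold like this can be sketched as a small form model that refuses to build a prompt until the key aspects are filled in. The fields below loosely mirror the inputs described for Story AI, but the `StoryBrief` class, the `REQUIRED` tuple, and the prompt wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StoryBrief:
    # Hypothetical scaffold loosely mirroring the inputs Story AI asks for.
    style: str = ""
    main_character: str = ""
    setting: str = ""
    main_conflict: str = ""
    special_requests: str = ""  # optional


REQUIRED = ("style", "main_character", "setting", "main_conflict")


def missing_fields(brief: StoryBrief) -> list[str]:
    """Return the required aspects the user has not filled in yet."""
    return [name for name in REQUIRED if not getattr(brief, name).strip()]


def to_prompt(brief: StoryBrief) -> str:
    missing = missing_fields(brief)
    if missing:
        # Nudge the user back to the form instead of sending a thin prompt.
        raise ValueError("please fill in: " + ", ".join(missing))
    prompt = (
        f"Suggest three {brief.style} story ideas. "
        f"Main character: {brief.main_character}. "
        f"Setting: {brief.setting}. "
        f"Main conflict: {brief.main_conflict}."
    )
    if brief.special_requests.strip():
        prompt += f" Special requests: {brief.special_requests}."
    return prompt


brief = StoryBrief(style="mystery", main_character="a retired lighthouse keeper",
                   setting="a fog-bound island")
print(missing_fields(brief))  # ['main_conflict']
```

The form, rather than the user, carries the knowledge of what a good story prompt needs; the user only answers concrete questions.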

3. Leverage existing artifacts as input

Another way of providing detailed, high-quality input is to use existing artifacts as input and let Generative AI refine them, style them, or convert them into a different format.

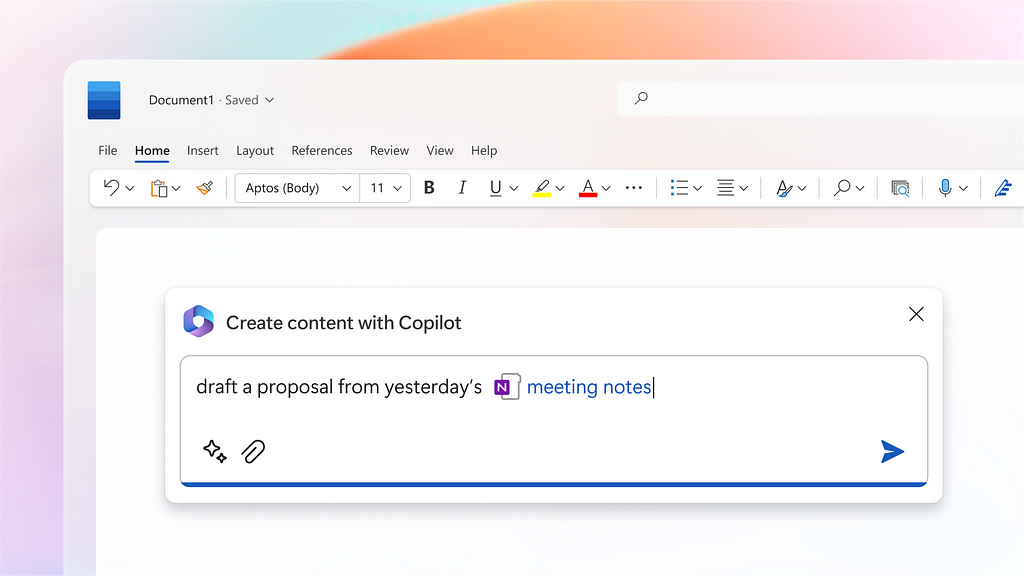

Recent announcement videos from both Google Workspace and Microsoft Office illustrated this scenario.

For example, you can write a scrappy draft of an email on your phone, without needing to consider the format, and let Gmail formalize it for you.

Or take your raw meeting notes from yesterday, which capture all of your team’s ideas, and convert them into a proposal in Microsoft Word.

This is, in my opinion, a great partnership between humans and AI, where human beings are still in charge of the content. After all, Generative AI is a reasoning engine, not a knowledge base. Without content to ground it, all it can do is hallucinate and fabricate. But with human beings providing the content, even in its raw form, AI can style it and make it awesome.
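One way to keep humans in charge of the content is to pass their raw artifact to the model with instructions to change only its form. The sketch below is a hypothetical prompt wrapper (`build_rewrite_prompt` and its wording are illustrative, not how Gmail or Microsoft Word actually work); instructions like these reduce, but do not eliminate, the risk of fabricated details.

```python
def build_rewrite_prompt(raw_notes: str, target_format: str) -> str:
    """Wrap human-written raw content in an instruction to restyle it (hypothetical).

    The notes are meant to be the only source of facts; the model is asked to
    change the form, not to add content.
    """
    notes = raw_notes.strip()
    if not notes:
        # Without human-provided content there is nothing to ground the output in.
        raise ValueError("no source content provided")
    return (
        f"Rewrite the notes below as a {target_format}. "
        "Use only the information in the notes; do not add facts, names, or numbers "
        "that are not there. Mark anything unclear with [TODO].\n\n"
        "NOTES:\n"
        f"{notes}"
    )


notes = """
- ship beta to 50 pilot customers
- Priya owns onboarding flow
- open q: pricing tiers?
"""
print(build_rewrite_prompt(notes, "one-page project proposal"))
```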

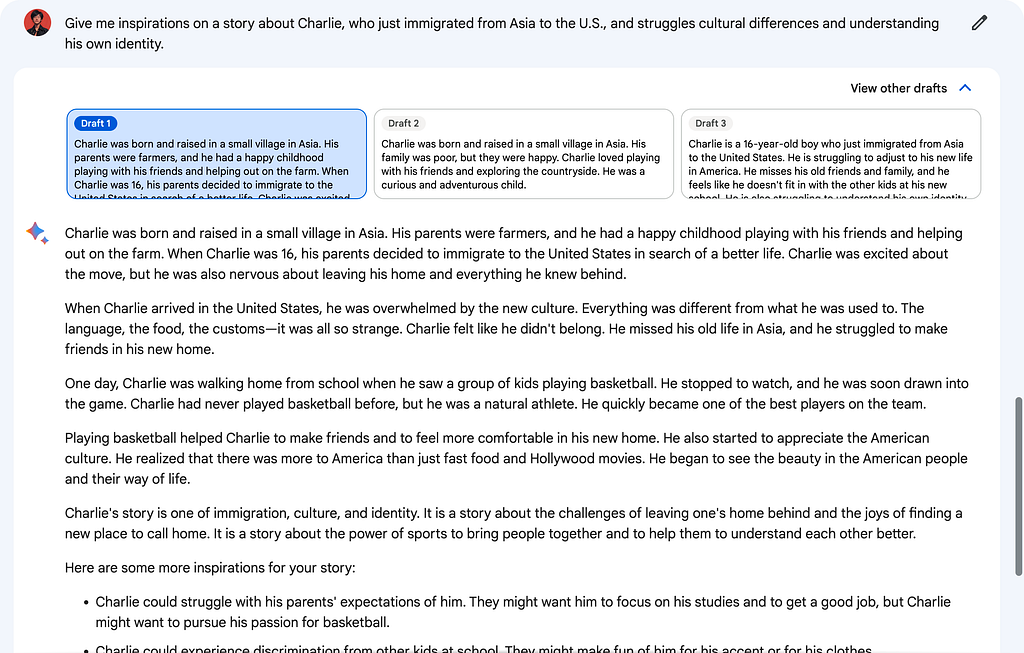

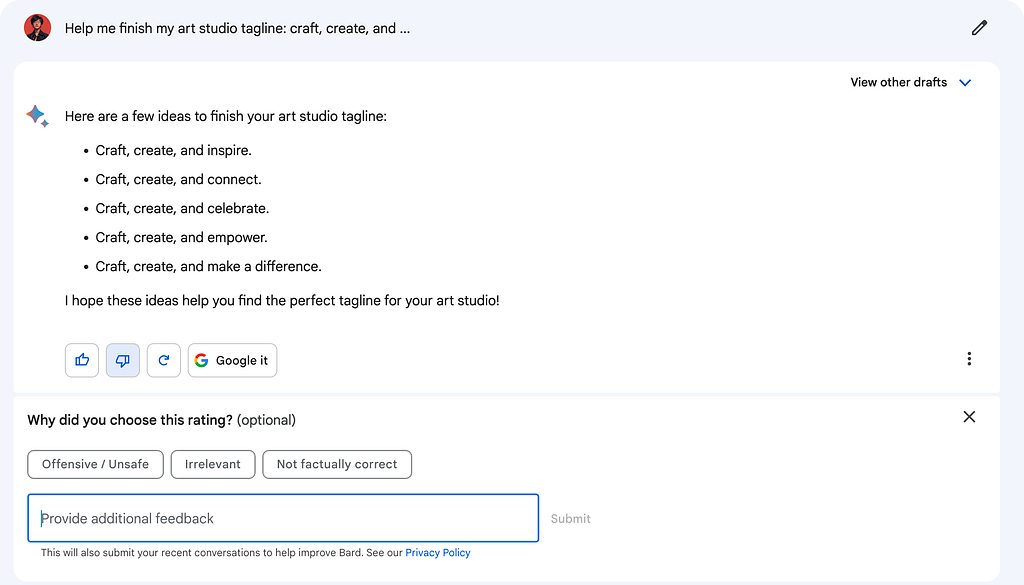

4. Quantity over quality

Even with constraints and good input, it might still be hard for the AI to fully capture the user’s intention in one try. Therefore, it can help to design the AI tool to provide a few options and let users pick their favorites to polish further.

“Quantity over quality” is also one of the principles when it comes to brainstorming. Therefore, one of the good uses of Generative AI is to provide options to inspire the user, instead of creating the end solution in one shot. See it as a muse, not an oracle.

This is also where we can turn the so-called “drawback” of AI models into an advantage: instead of expecting flawless execution of our ideas, which is difficult given the limits of language, we can embrace the randomness AI models introduce. A “misinterpretation” by the AI model may lead to serendipitous discoveries, where the imperfect output expands our perspective and encourages us to explore new, uncharted paths.
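In code, “quantity over quality” can be as simple as sampling several drafts and keeping the distinct ones for the user to choose from. Many model APIs can return multiple samples per request; here a hypothetical `fake_generate` stub stands in for a real model call, and `draft_options` is an illustrative helper, not a library function.

```python
import random
from typing import Callable

# A generator takes a prompt and a seed and returns one draft.
Generator = Callable[[str, int], str]


def fake_generate(prompt: str, seed: int) -> str:
    """Stand-in for a real model call: picks a random tone per seed (hypothetical)."""
    rng = random.Random(seed)
    tone = rng.choice(["playful", "formal", "punchy", "warm"])
    return f"[{tone}] {prompt}"


def draft_options(prompt: str, generate: Generator, n: int = 3,
                  max_tries: int = 10) -> list[str]:
    """Collect up to n distinct drafts so the user can pick one to refine."""
    options: list[str] = []
    for seed in range(max_tries):
        draft = generate(prompt, seed)
        if draft not in options:  # skip duplicates so every option adds something new
            options.append(draft)
        if len(options) == n:
            break
    return options


for i, option in enumerate(draft_options("Tagline for a neighborhood bakery", fake_generate), 1):
    print(i, option)
```

Capping attempts with `max_tries` keeps the cost bounded when the model keeps returning near-identical drafts; the user may then see fewer than `n` options.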

5. Allow for user control & fine tuning

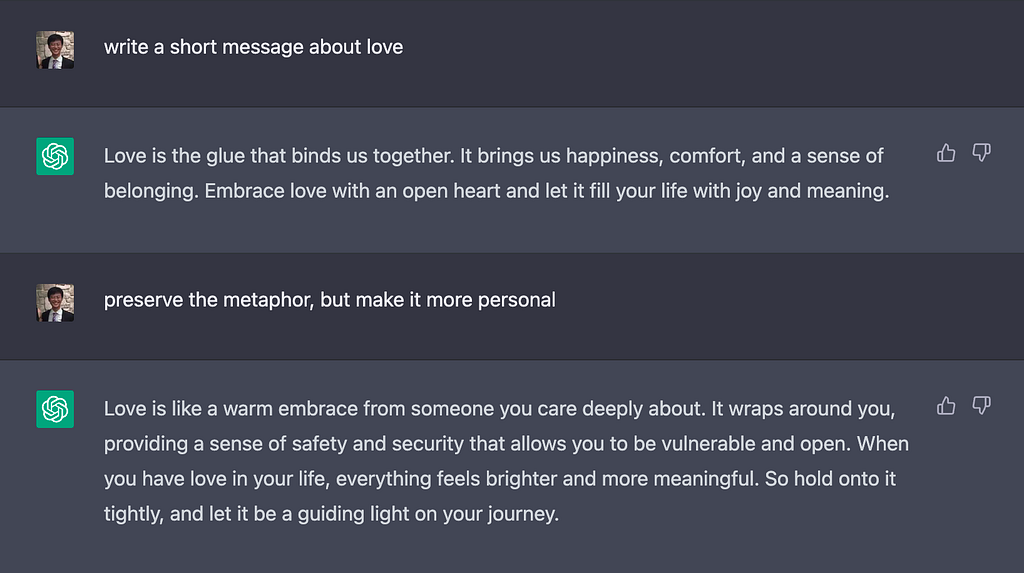

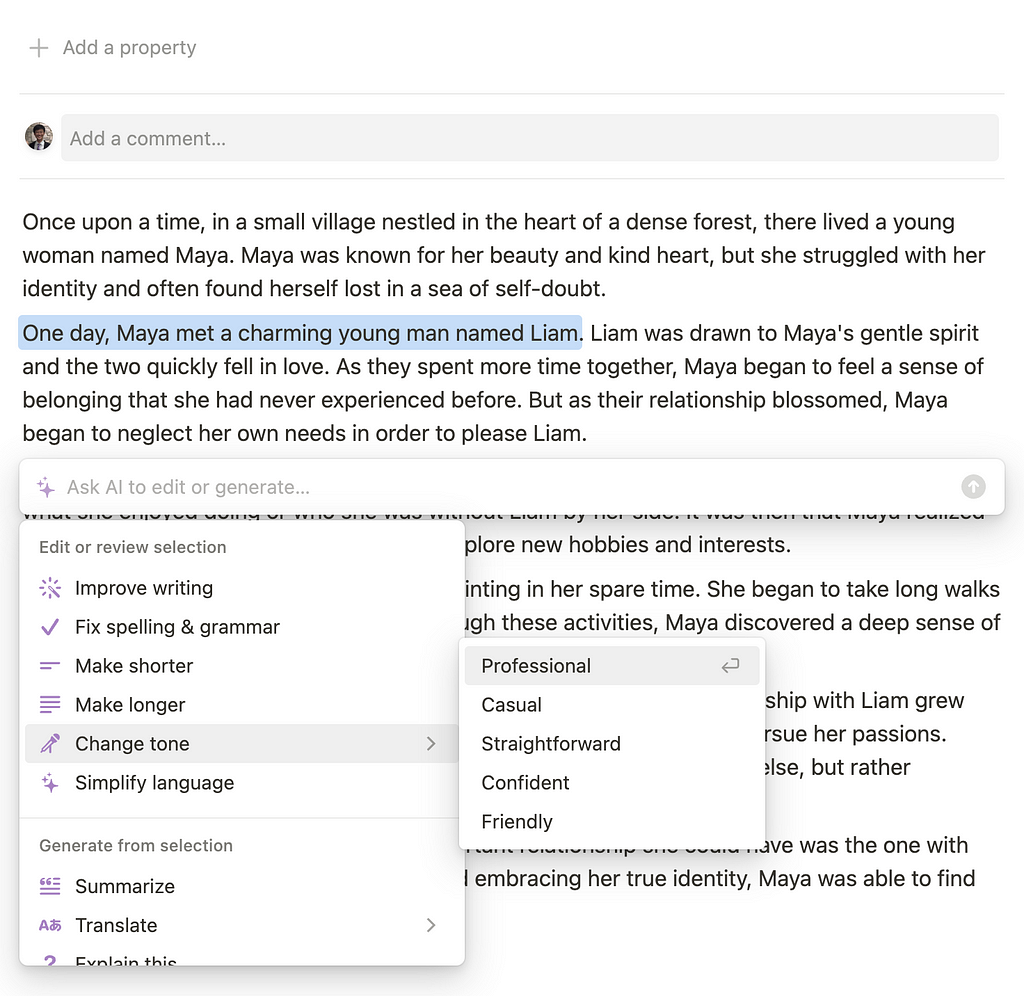

Even with multiple responses, what the AI generates is usually still just a starting point. Ultimately, users need the ability to control and fine-tune the results (“User control and freedom” is also one of Jakob Nielsen’s 10 usability heuristics).

In ChatGPT, you can ask it to regenerate the response with new constraints. However, because of the probabilistic nature of generative models and the imprecision of language, this can be challenging.

In the example above, ChatGPT might not have understood what I meant by “preserving the metaphor” (I was vague as well). It regenerated the whole thing after my 2nd prompt, whereas I just wanted to change the wording a little bit.

Getting the model to regenerate again and again through prompt engineering alone is hard, and it can lead to frustrating experiences where users finally give up after 21 iterations.

Instead, we can design the tool to let users edit the response themselves, or easily select part of it to isolate what needs to change through direct manipulation, and then refine that part further with AI’s help.

This is easy for generated text, since it is readily editable. But early previews of Adobe Firefly show that it’s also possible to isolate parts of an image, letting users fine-tune the result based on their needs.
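For text, the “select and refine” interaction boils down to rewriting only the selected range and splicing it back, so the rest of the draft stays exactly as the user left it. In this sketch, `revise_selection` is a hypothetical helper and `fake_rewrite` stands in for a model call that receives only the selected fragment plus the user’s instruction.

```python
from typing import Callable


def revise_selection(text: str, start: int, end: int, instruction: str,
                     rewrite: Callable[[str, str], str]) -> str:
    """Rewrite only text[start:end] and splice it back; everything else is untouched."""
    if not 0 <= start < end <= len(text):
        raise ValueError("selection must be a non-empty range inside the text")
    selected = text[start:end]
    replacement = rewrite(selected, instruction)
    return text[:start] + replacement + text[end:]


def fake_rewrite(fragment: str, instruction: str) -> str:
    """Hypothetical stand-in for a model call that only sees the selected fragment."""
    return fragment.upper() if instruction == "make it louder" else fragment


draft = "Time is a river. It carries us all."
start = draft.index("a river")
end = start + len("a river")
print(revise_selection(draft, start, end, "make it louder", fake_rewrite))
# Time is A RIVER. It carries us all.
```

Because the model never sees the unselected text as something to rewrite, it cannot “helpfully” regenerate the whole response the way a follow-up chat prompt might.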

6. Learn from user habits & preferences

These back-and-forth edits are common even when we work with other human beings, especially in the early stages of collaboration. Over time, we learn each other’s work styles and preferences, and establish common ground more easily.

A well-designed AI system should be similar, learning about users’ habits and preferences through implicit and explicit input.

Current AI tools usually only allow users to give feedback on the quality of a response, with the goal of improving the model itself, not personalizing it for that user.

It might be quite expensive to create a personal model for each user today, but in the future we might start to see models that learn from their past interactions with a user and, over time, pick up that user’s style to generate more personalized responses that meet their needs in fewer attempts.
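Per-user models are beyond a quick sketch, but a much lighter technique, prompt-level personalization, captures the idea: remember which traits a user keeps choosing and add them to future prompts. This is a swapped-in, simplified approach rather than the learned personal models described above; the `PreferenceMemory` class, its weights, and the trait labels are hypothetical.

```python
from collections import Counter


class PreferenceMemory:
    """Hypothetical per-user memory of which output traits the user keeps choosing."""

    def __init__(self) -> None:
        self.votes: Counter[str] = Counter()

    def record(self, trait: str, weight: int = 1) -> None:
        # Explicit signals (e.g. "more like this") can be weighted higher than
        # implicit ones (e.g. the user simply picked or trimmed a draft).
        self.votes[trait] += weight

    def top_traits(self, k: int = 2) -> list[str]:
        return [trait for trait, _ in self.votes.most_common(k)]

    def personalize(self, prompt: str) -> str:
        traits = self.top_traits()
        if not traits:
            return prompt  # nothing learned yet, send the prompt as-is
        return f"{prompt}\nThe user usually prefers: {', '.join(traits)}."


mem = PreferenceMemory()
mem.record("concise", weight=2)  # explicit: user tapped "more like this"
mem.record("casual")             # implicit: user picked the casual draft
mem.record("concise")            # implicit: user trimmed a long draft
print(mem.personalize("Draft a reply to Sam"))
```

Keeping preferences outside the model makes them cheap to store per user and easy to show or reset, at the cost of being far cruder than a model that actually learns the user’s style.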

Summary

Generative AI is hot, and the frenzy behind it might give people unrealistic expectations of what it can do. However, it’s important to remember that:

- For Generative AI, input quality determines output quality. When possible, provide users with options and constraints, and generate high-quality prompts on their behalf. Leverage existing human-created content in raw form, and refine it with the superpower of AI.

- Language is imprecise, and generative AI models are probabilistic. When in doubt, provide multiple drafts for users to build on; more options might even inspire them in serendipitous ways. Allow users to edit the results through direct manipulation to compensate for the limits of language.

- In the long term, learn and adapt to users’ habits to make the collaboration more seamless.

Thanks for reading! There’s much more to Generative AI than what was covered in this article. In addition to generating and inspiring, people also use it to gain insights, get advice, and even automate tasks! Stay tuned for more articles where I explore these topics in depth, and let me know your thoughts in the comments!

If you enjoyed this article, consider following me on Medium and Twitter, and connecting with me on LinkedIn!

I’m passionate about topics like designing for emerging technologies (AI, AR, Ambient Computing, etc.), systems thinking, creativity, business, and personal growth. Down to grab ☕️ and bounce ideas 💡.

How to design generative AI experiences to be truly helpful was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.