Now digital humans are here. They sound and look just like us.

Digital humans, AI-powered digital counterparts, are going to change how we interact with the world around us.


In 2016 Miquela Sousa, or @lilmiquela, quickly grew to be one of Instagram's top influencers. At first glance, there is little wrong with the 19-year-old Brazilian-American model. She enjoys life with friends, goes on fancy holidays and speaks about her skin-care routine.

But once we look closer, she does not fully look like us. She is a digital human, an AI-powered avatar that functions independently; she is not real. Miquela caused a lot of backlash. Hundreds of others would have loved to have that job, but now it is being given away to a computer.

It reminds me vaguely of the Black Mirror episode ‘Rachel, Jack and Ashley Too’. In that episode a digital twin of a famous pop artist decides to go in a creative direction, completely overshadowing her human counterpart. This fictional story is an illustration of how AI can go rogue and create undesired situations.

Virtual rapper FN Meka dropped from Capitol Records

Last month such a story became reality, when digital human and rapper FN Meka became a hit on YouTube. The rapper quickly gathered 1 billion views with his songs and became the first digital rapper signed to Capitol Records.

But the rapper was dropped from the label amid growing backlash over racial stereotypes, including use of the N-word and an Instagram post of the rapper being beaten by a police officer in prison.

Capitol Records then severed its ties with the digital human and released a statement:

“We offer our deepest apologies to the Black community for our insensitivity in signing this project without asking enough questions about equity and the creative process behind it,” the statement read. “We thank those who have reached out to us with constructive feedback in the past couple of days — your input was invaluable as we came to the decision to end our association with the project.”

While we do have a certain influence over digital humans, they have a mind of their own. Undesirable situations are inherent to the uncontrolled nature of digital humans. But we could also see digital humans being used for the better. Digital humans could revolutionize entire industries.

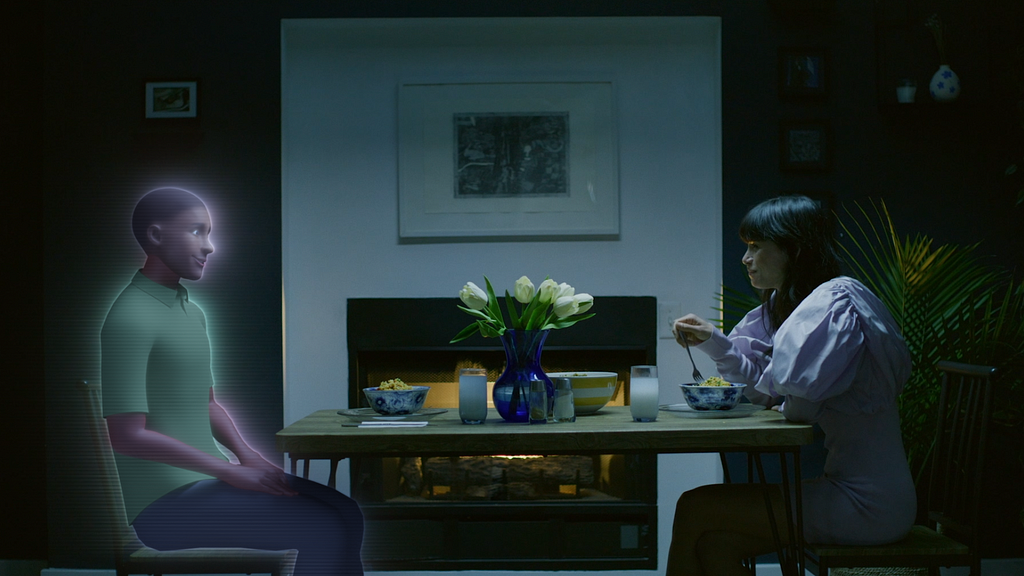

Replika’s digital friend trying to help against depression and anxiety

Replika is an example of a piece of software that might help us down the line. The company has engineered an ‘AI friend’ that aims to make people feel better. The goal of this AI friend is to help against depression and anxiety.

Replika is a digital version of a pen pal. So far, the company has 10 million registered users and claims that “more than 85% of conversations make people feel better.”

Lolita the virtual agent hunting sexual predators

Another experiment was engineered in Spain in 2013. A virtual chat agent named Negobot, referred to as Lolita, posed as a young female to track potential sexual predators online.

Lolita turned out to be quite successful. The virtual agent was able to mimic subtle human movements to such an extent that the project identified over 1000 possible sexual predators.

What role will digital humans play in design?

I think that digital humans could, with precaution, revolutionize the way we design human-machine interfaces. A human tone can go a long way, especially in emotionally loaded contexts.

The first obvious use case for digital humans lies in customer care. From a strategic standpoint, designers could build services around digital humans. As Computecoin put it:

“Digital humans, as their name suggests, require zero sleep and no wages.” — Computecoin on digital humans

A digital human has many upsides: it serves as part of a “digital workforce” (as Greg Cross, CEO of Soul Machines, describes the company's digital humans) that is easily scaled, knows 72 languages, and is available 24 hours a day. Digital humans can access the internet, leveraging real-time data. And most importantly, they work at a fraction of the cost of a fully human customer care unit.

The strategic upside is clear. But there is another aspect to the technology that might be even more interesting: the user experience. We could design new interactions with machines that revolutionize human-machine interaction.

The human-centric aspect of user experience design is where digital humans shine. In situations where emotions play a role, a human touch may be the most important part of any interaction. Digital humans can provide that touch and still leave us room to design.

We can design how digital humans are supposed to react by instructing them about our expectations. We can design an interaction through these instructions. Do you want an amicable, friendly tone? Maybe a more pressing tone of voice? The AI can get that done.

A great example I could see happening is the introduction of digital humans in emergency dispatch. The design proposed by industrial designer Alec Momont introduces a clever system of emergency drones, which could reach emergency situations faster than any ambulance can. The drone then connects you to a doctor, who can help you from a distance. Through cameras placed in the drone, the doctor is able to assess the situation.

I could see this doctor being replaced with a digital human. The human touch here is important: it can help calm the patient and bystanders, which is essential in any emergency situation.

But the real power is in the internet of things. A digital human could take in data, maybe through a blood sample, process it in real time, and offer better medical care than any human could have.

Digital humans as a time saver in UX research

Another great way to leverage digital humans, especially important for designers, is in user research. The repetitive task of user research could be automated with digital humans, who can ask the right questions and connect the dots for us. We can already see these tools being developed right now.

The power of digital humans here is that the AI could gather huge datasets and distill them into user insights. I am building a tool for UX designers to automatically transcribe and gain insights using AI technology. The goal is to empower designers by automating the long process of user research, so that designers can focus on the actual designing. Digital humans will be able to use those datasets for precise questions, leaving us designers with more time for design.

Should we want digital humans by our side?

In the future, we might see such digital humans become more and more present. We could imagine a world where customer service calls are handled by friendly, human-like AI. But the technology's reach could extend far beyond that. Human roles in interactions that need a human tone, such as emergency dispatchers, might be replaced by a digital counterpart.

When designing future interactions, we should consider digital twins as an option. Do we want, and should we want, a human touch? Can we leverage (real-time) data? Then maybe think about a digital twin.

But given the uncontrollable nature of some artificial intelligence, we should ask ourselves whether we want these things to come true. Stories such as FN Meka's have shown that digital humans can behave unexpectedly. We are trusting AI with non-critical tasks now, but what if we take it one step further?

Are you interested in reading further into these topics? Here are some interesting links about digital humans and design:

- Handbook of virtual humans on the use cases of digital humans

- Virtual Humans: Today and Tomorrow on the role of virtual humans in the wider role of artificial intelligence

- Podcast by In Machines We Trust

- Digital human developed by Deloitte

- The rise of digital humans in China

- Bruce Willis has a digital twin

- UneeQ is leveraging digital humans for design

Digital humans are here. They sound and look just like us. was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.