As the dust settles on the GenAI hype, it’s time to start thinking about usability and the opportunity it presents to address declining engagement and retention.

GenAI represents a new paradigm in User Interface Design and a radical shift from the Command-Based Interaction Models and Graphical User Interfaces that have dominated the last 50 years of computing.

It’s strange to think that almost overnight, prompting joined clicking and scrolling as a primary means of interacting with machines.

However, while GenAI tools like ChatGPT, Google’s Bard, Midjourney and DALL-E 2 have served as a catalyst for more conversational and natural interactions between humans and machines, they are by no means the end-state.

Today we have the privilege of watching these tools go through their ‘awkward adolescence’ as they encounter a range of challenges that hold back adoption and scale: use cases without compelling value propositions, data and privacy concerns, hallucinations, and limited traceability and reproducibility of outputs.

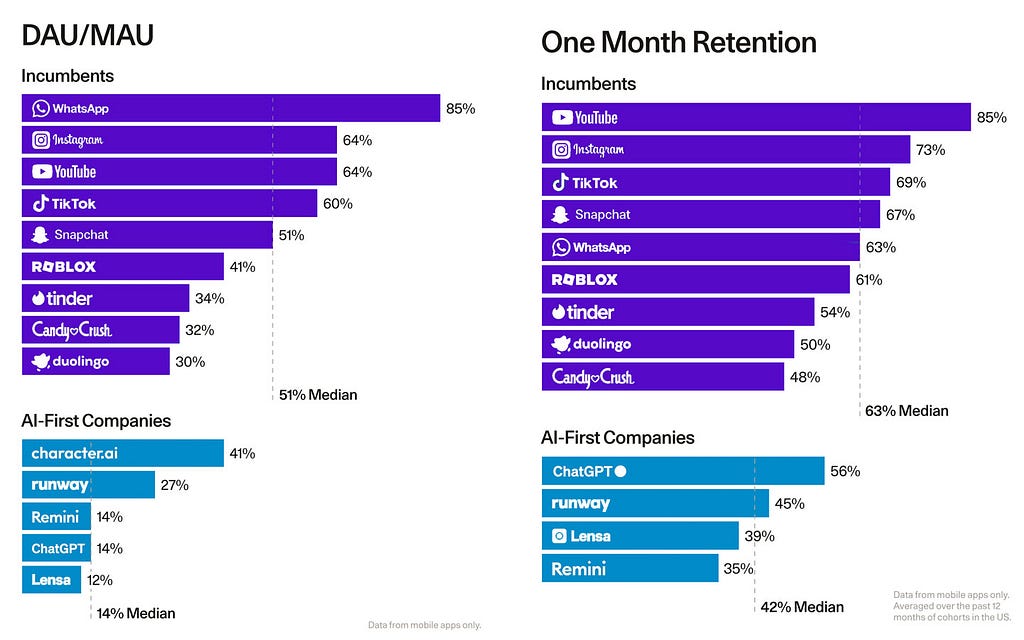

Research by the VC firm Sequoia highlights the challenges AI-first companies face relative to tech incumbents as they tackle declining engagement and retention. Leading consumer applications have an average daily active user rate of 63% and an average monthly retention of 51%. AI-first companies lag behind: only 14% of their users are active daily, and their monthly retention is 42%.

Solving this challenge is complex and will require Product teams to break through the hype and get back to basics across the entire development process. That means shifting from technology-led to user-led thinking, tackling material problems and latent needs (rather than assuming everything can be solved with an AI co-pilot), and focusing relentlessly on the user experience to break the confines of chat interfaces and rapidly improve usability.

AI’s usability problem

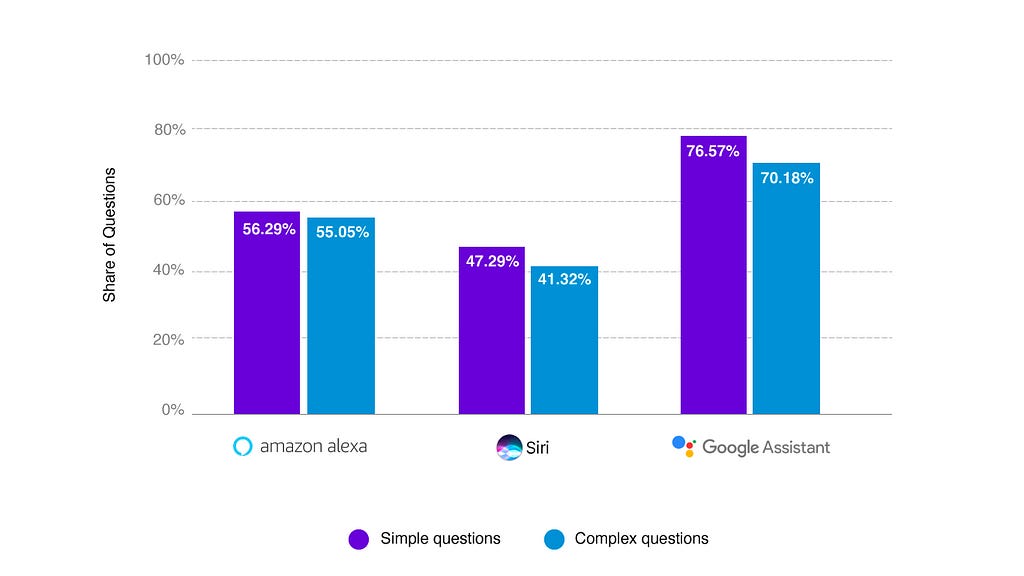

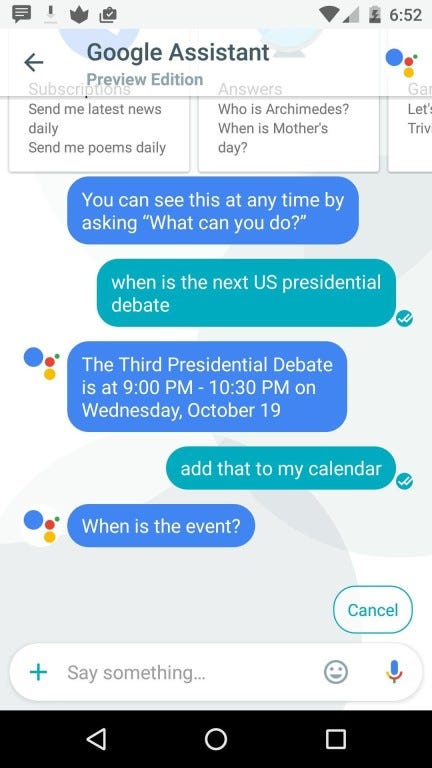

In Product Design, usability is associated with five main attributes: learnability, efficiency, memorability, errors and satisfaction. To date, there has been little published usability research into prompt-driven AI. However, virtual assistants such as Google Assistant, Alexa and Siri have historically performed poorly in standardised usability tests due to a range of factors, including limited use cases, high error rates, poor integration with other applications, and only basic use of contextual information (e.g. current location, contacts, or frequently visited places).

Virtual assistants struggle with complex queries: Alexa answers them accurately 55% of the time, while Google Assistant leads the pack at 70% accuracy.

The trend is also visible in the growing number of users sharing (very funny) examples online of virtual assistants failing at the simplest of tasks and seemingly getting dumber over time.

While the smarts of Large Language Models are improving our AI interactions through pre-trained knowledge and better natural language understanding, limited consideration has been given to the non-technical aspects of how users need to engage with these systems, and specifically to the new demands that prompting places on users.

The Prompting Gap

Generative AI tools today rely on the specification of intent, with users only needing to tell the machine what they want to achieve as opposed to the steps to get there.

This works well as long as intent exists. In reality, users often don’t know what they are looking for.

Not only do users need intent to interact with the system, they also need to be able to articulate it through prompts. This represents a significant shift in behavioural patterns, given that 95% of a typical experience with a graphical interface today might be reading and engaging with visual cues and only 5% writing and engaging with search. In a world of text-based, prompt-driven AI, the split could be closer to 50% visual and 50% writing.

I believe this will create critical usability challenges as the technology scales, because writing is significantly harder than reading. Simply put, writing requires thought: users must articulate concepts, find the right vocabulary and construct grammatically correct sentences, in a world where people want to minimise cognitive effort and often don’t want to think.

This raises the question: does prompt-driven AI deliver materially better outcomes than graphical interfaces for users with lower literacy? And what mechanisms can we deploy to help these users make advanced use of prompt-driven AI capabilities? The risk is that tools are built for the highly literate, in a world where over half the adult population in developed countries have the reading and writing ability of 9- to 12-year-olds.

Glimpses of the challenge ahead can be seen in the emergence of prompt marketplaces and online prompting courses, which act as intermediaries between users and these tools and capitalise on knowledge and literacy gaps.

With this in mind, how might prompt-driven AI need to evolve to improve usability? Three key shifts need to be accelerated to ensure users aren’t left behind.

- Multimodal interfaces

- Hybrid graphical and prompt-driven interfaces

- Generative interfaces

Shift 1: Multimodal

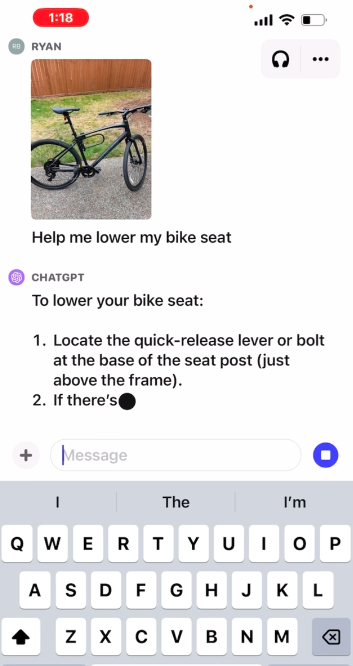

It should be no surprise that prompt-driven AI is evolving to let users provide input and receive output in a range of modalities. OpenAI recently announced that ChatGPT now supports voice chat, along with the ability to prompt by uploading images, akin to Google Lens. Users can take a photo and include it in a prompt, helping the system better understand their intent and contextualise its responses.

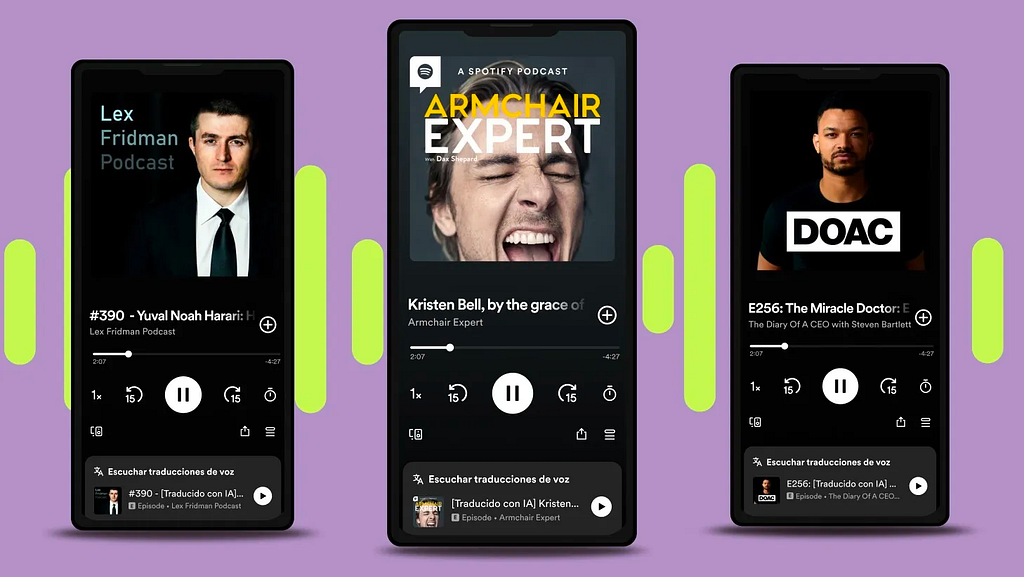

This shift is also evidenced by OpenAI’s recent partnership with Spotify and the testing of features to translate podcasts into other languages while retaining the sound of the podcaster’s voice.

In the future, these capabilities will very likely extend to other modalities, such as video and gesture (e.g. sign language, facial expressions and body language), to better understand intent and context and to deliver more personalised experiences.

Shift 2: Hybrid Interfaces

It’s likely we will see more and more graphical elements introduced into prompt-driven interfaces, in a move away from chat boxes as the default.

This has strong parallels with the evolution of Google Search, which moved away from plain text and lists to a more sophisticated user interface with universal search tabs (2007), search suggestions (2008), knowledge panels and carousels (2012) and expandable ‘people also ask’ lists (2015). The ongoing development of these interactive elements helped Google bridge the human-machine gap and improve usability and the search experience by letting users complete tasks faster.

GrammarlyGO recently introduced a range of graphical and interactive elements into its generative AI co-pilot, which provides a glimpse of where this could be heading. These cover a range of use cases, from generating prompt ideas (e.g. “write a story”) and shortcuts (e.g. “shorten it”) to selecting a tone of voice through emojis to sound friendlier, more professional or more exciting.

Shift 3: Generative Interfaces

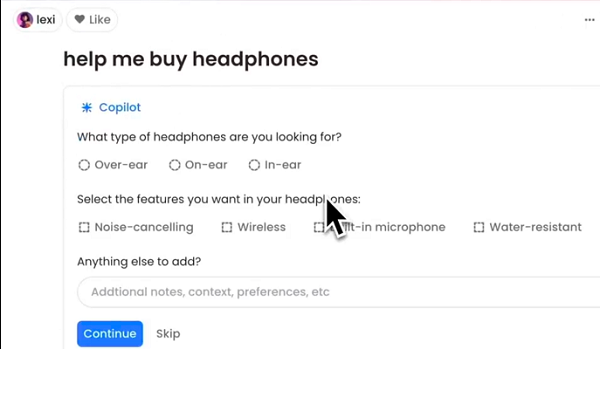

In addition to hybrid interfaces, we will begin to see generative elements injected into the interface, contextualised to each user prompt. While this space is only just emerging, a great example is Perplexity’s recently launched Copilot, which leverages OpenAI’s GPT-4 model to request interactive inputs from users based on their prompts.

This gives users a richer search experience: they can refine their prompts in context without typing follow-up queries, much as filters work in traditional search.
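To make the filter analogy concrete, here is a minimal Python sketch of a generative interface loop. It assumes a hypothetical schema in which the system turns a prompt into a few multiple-choice inputs and then folds the user's selections back into the prompt. The names `ClarifyingInput`, `build_form` and `refine_prompt` are illustrative only, and a simple keyword rule stands in for the language-model call; this is not how Perplexity's Copilot is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class ClarifyingInput:
    """One interactive control (e.g. a row of buttons) generated from a prompt.

    Hypothetical schema, for illustration only.
    """
    label: str
    options: list[str]


def build_form(prompt: str) -> list[ClarifyingInput]:
    """Propose clarifying inputs for a prompt.

    Assumption: in a real product a language model would generate these;
    here a keyword rule stands in for that call.
    """
    if "trip" in prompt.lower():
        return [
            ClarifyingInput("Budget", ["Low", "Medium", "High"]),
            ClarifyingInput("Trip length", ["Weekend", "1 week", "2+ weeks"]),
        ]
    return []  # No clarification needed: the prompt goes through unchanged.


def refine_prompt(prompt: str, form: list[ClarifyingInput], selections: dict[str, str]) -> str:
    """Fold the user's clicks back into the prompt, much like applying search filters."""
    constraints = []
    for control in form:
        choice = selections.get(control.label)
        if choice is None:
            continue  # The user skipped this input.
        if choice not in control.options:
            raise ValueError(f"{choice!r} is not an option for {control.label!r}")
        constraints.append(f"{control.label}: {choice}")
    return f"{prompt} ({'; '.join(constraints)})" if constraints else prompt


if __name__ == "__main__":
    prompt = "Plan a trip to Lisbon"
    form = build_form(prompt)
    print(refine_prompt(prompt, form, {"Budget": "Medium", "Trip length": "Weekend"}))
    # Plan a trip to Lisbon (Budget: Medium; Trip length: Weekend)
```

Each click adds a constraint to the prompt without the user having to write another sentence, which is where the usability gain over a plain chat box would come from.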

Thinking of exploring prompt-driven AI?

- Back to basics: Start with user frictions and latent needs to avoid prompt-driven AI use cases and digital products without a compelling value proposition.

- Solution-agnostic: See through the hype and be open to solutions that aren’t built on GenAI or any type of technology. Challenge Design teams to explore policy, people or process changes that can solve the same problems.

- Question Conversational Design: Ask whether Conversational Design is the right model. Leverage Google’s Conversational Design Playbook to help.

- Understand literacy: Ensure literacy is explored in Discovery research and considered in the context of Usability Testing.

- Break the interface: Challenge Design teams to break the chat interface and explore hybrid and generative elements to improve usability if required.

The usability problem with prompt-driven AI was originally published in UX Collective on Medium.