These days, conversational agents seem to be serving up remarkably authentic responses alongside sophisticated recommendations, while also saving everyone time and money. Most people even seem to like them. So, what’s the catch — and how do we make them better?

Picture this: there is a magic worker in our midst who can help you cut customer support costs by around a third, reduce response times significantly (replying 80% faster than live agents), provide a 24-hour service, and even handle entire conversations from start to finish. This efficiency and ease of use are the hallmarks of rockstar chatbots that keep drumming up exposure alongside increasing adoption rates, and it’s hard to see the enthusiasm fading anytime soon.

No wonder it’s a thriving area, with the global market predicted to reach $454.8 million in revenue by 2027, according to Statista. Our conversational assistants are already quite good at their programmed Q&A, as well as at handling admin tasks and bookings, and the list keeps getting longer. These multi-talented helpers also have a knack for generating leads and e-commerce transactions, with the latter expected to exceed $142 billion within the next two years. Talk about a good investment.

The glaring caveat? It’s just not for everyone. Tech-savvy users might welcome it with keyboards at the ready, but some sources show that 60% of users still prefer more organic conversations and resort to traditional methods. Perhaps as younger generations get older, a larger segment of the population will embrace chatbots over human contact when it comes to resolving queries. But we also have to consider the target audience from an age and accessibility perspective, and on both counts additional fine-tuning might be required until AI fully masters the gift of gab.

Conversational agents can also be seen as sneaky middlemen that block access to live representatives and are less adept at solving complex issues. So, overall, it would appear that bots haven’t entirely won our hearts or earned undivided approval (yet).

Chatbots are increasingly used in commercial contexts as well as in healthcare, education and other sectors. In order to solve problems, or make product and service recommendations, they often collect personal information in a conversational manner. Because their fine-tuned setup conveys and employs human characteristics, it might not even be evident that we are interacting with a smooth-talking machine. This raises the question of how much we are ready to trust AI with our nitty-gritty details, and how aware we are when chatbots are sweeping up our data.

It’s one thing to let savvy algorithms fine-tune our playlists, so it’s truly music to our ears, but why are chatty robots now getting up close and personal with us, too? We don’t seem to mind the tech-wizardry getting done behind the curtains, but when we have to actively engage with it, this can trigger suspicion and put us on our guard.

This isn’t just guesswork: studies have found that users are willing to disclose more information and spill the tea when they perceive chatbots as human-like. When charismatic AI gives advice (whilst sounding like a fellow mortal), chances are users also won’t question it or worry about privacy and reliability as much as they would if the same advice came from a machine-sounding counterpart.

All of this offers a great segue into anthropomorphism, which is the attribution of human traits, behaviours and emotions to non-human entities. Whether it’s done intentionally or without realising it, endowing your bot with characteristics such as friendliness and charm can quickly become a double-edged sword, raising ethical doubts.

It may well be best if businesses actively disclose that the user is interacting with a machine from the get-go. Participants in a Nielsen study were not only pleased, but also tailored their language to be more keyword-based and straight to the point, when they knew that they were chatting to AI.

Luckily, we also have a pretty good idea of what makes something appear as, you know, one of us. Research differentiates three types of cues that can convey humanness specifically in chatbots:

- visual cues (such as avatars with images of people),

- identity cues (think human names), and

- conversational cues, whereby the AI imitates human language (for example, by acknowledging responses).

Even if visual cues are not particularly strong, interactivity and real-time conversations can compensate for the artificial nature of a faceless virtual assistant. In other words, you can balance out “lacking” elements with timely responses and more complex exchanges.

However, customers might also feel less keen on chatbots if the communication feels too impersonal and lifeless. For example, in a German user behaviour study, 52% of the respondents disliked human-machine interactions for this very reason. To this end, some researchers insist on incorporating warmth and empathy into chatbot discussions to improve their flow and overall experience.

Perhaps even more important is that chatbots are reliable and competent, as this significantly boosts customer satisfaction. Assurance, in the form of courtesy, knowledge and confidence in their ability, is also considered an influential aspect. Users expect the same experience online as they would have in a face-to-face setting, so chatbots acting as replacements for human agents should be convincing in their performance, style and delivery.

What seems to matter a little less is how fast they respond, as users either seem to have lower expectations or believe that chatbots require time to comprehend their query. Nonetheless, once the reliability and assurance boxes are ticked, pumping up speediness further adds to the experience.

Finally, interactivity includes prompt reactions and problem-solving. These are essentially the cherries on top that can enhance or sustain the relationship between customers and the brand.

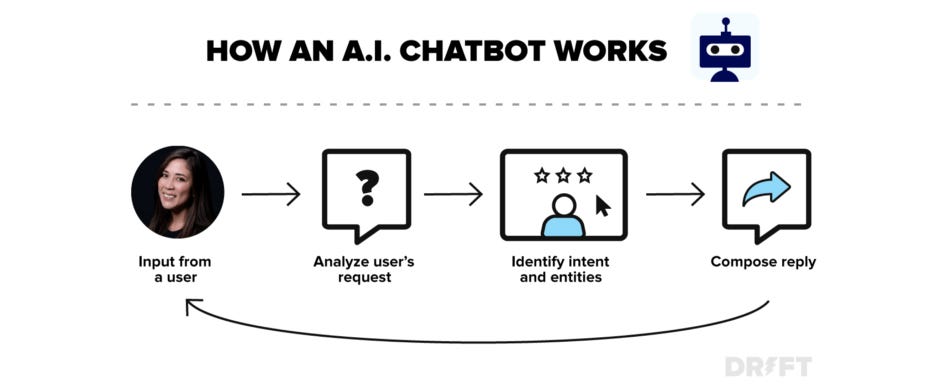

It would be poor form to omit how these on-demand gabbing machines actually do their thing, so it seems sensible to go through this now in broad strokes. Chatbot technology is powered by a branch of artificial intelligence called natural language processing (NLP), which also underpins voice assistants such as Apple’s Siri and Amazon’s Alexa.

When presented with text, the conversational assistant processes and analyses it to interpret what the user said (and meant), then selects an appropriate response based on complex algorithms. NLP is essentially what helps companies develop bots capable of discussions that are often indistinguishable from those with human agents.
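To make the "process, analyse, pick a response" loop a little more tangible, here is a deliberately tiny sketch in Python. It is not how production NLP works (real systems rely on trained language models), and the intent names, keywords and threshold are all invented for illustration. It simply scores each hypothetical intent by how many words in the message match its keyword list.

```python
import re

# Hypothetical intents and keywords, invented purely for illustration.
INTENT_KEYWORDS = {
    "track_order": {"order", "track", "delivery", "parcel", "shipped"},
    "book_appointment": {"book", "appointment", "schedule", "slot"},
    "refund": {"refund", "return", "money", "back"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the message and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def rank_intents(message: str) -> list[tuple[str, float]]:
    """Score each intent by the share of message tokens found in its keywords."""
    tokens = tokenize(message)
    if not tokens:
        return []
    scores = []
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = sum(1 for token in tokens if token in keywords)
        scores.append((intent, hits / len(tokens)))
    # Highest score first; ties keep the dictionary order.
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def best_intent(message: str, threshold: float = 0.2):
    """Return the top intent, or None when nothing scores above the threshold."""
    ranked = rank_intents(message)
    if ranked and ranked[0][1] >= threshold:
        return ranked[0][0]
    return None


print(best_intent("Where is my order? I want to track the delivery"))
print(best_intent("It is raining cats and dogs"))
```

The second message shows why figurative language is hard: a keyword matcher finds nothing to hold on to, so the sketch returns `None` rather than guessing.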

The difficulty lies in, well, us. We have an impressive variety of ways of communicating or implying messages. Depending on our context, nuances, forms of speech and an array of other factors, what we say or write can have a machine-boggling number of interpretations. Hence, we basically hand these poor bots unstructured data, which can get super messy (especially if you throw metaphors, similes and other linguistic challenges into the mix).

Now that we’ve vaguely covered the underlying mechanisms, it’s time to turn to science for best practices around integrating our chatty helpers.

A recent research paper reviewed and summarised guidelines for implementing chatbots, with a focus on productivity. By analysing existing studies, the authors split and mapped features into three categories: graphical user interface-related features, features supporting the conversational process, and design elements of a humanised virtual assistant.

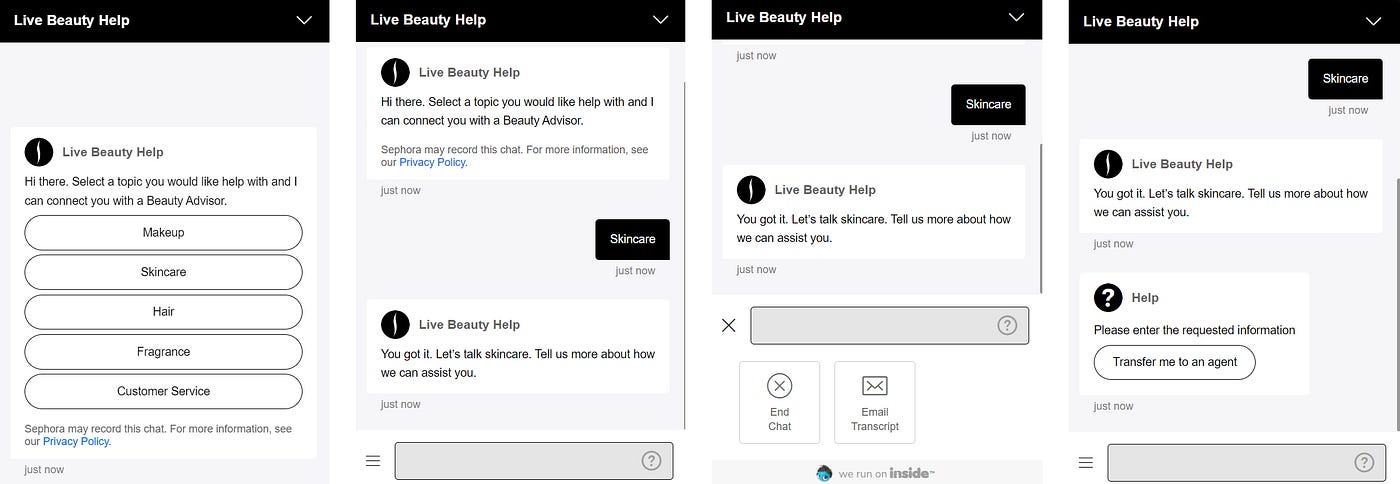

Kicking off with GUIs, optimising this part of the system can speed up the conversation by decreasing the input and effort required by the user.

The article details the following guidelines:

1) Present quick responses (e.g., buttons, carousels) for faster navigation and less typing effort by the users.

2) Provide conversational context through separate GUI elements (e.g., buttons, text fields) that allow manipulation of the context.

3) Provide dynamic quick responses taking into account the conversational context and showing the user possible next steps.

4) Use rich media elements such as images, GIFs and videos in the chat widget to convey information in a visual way.

5) Allow users to edit previous messages by clicking and altering them, thus possibly updating the current intent and the conversational context.

6) Decrease typing efforts by implementing a text prediction (auto-completion) feature providing word and intent suggestions.

7) Provide a persistent menu with chatbot capabilities that is always available.
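Guidelines 3 and 6 lend themselves to a quick illustration. The Python sketch below is only one possible reading of them: the conversation states, button labels and known phrases are hypothetical, and a real chat widget would draw them from its own dialogue model rather than hard-coded lists.

```python
# Hypothetical mapping from conversation state to suggested next steps.
QUICK_REPLIES = {
    "start": ["Track an order", "Book an appointment", "Talk to a human"],
    "order_found": ["Change delivery date", "Cancel order", "Talk to a human"],
}

# Hypothetical phrases the bot is known to understand.
KNOWN_PHRASES = [
    "track my order",
    "track my refund",
    "book an appointment",
    "cancel my order",
]


def quick_replies(state: str) -> list[str]:
    """Return buttons for the current state, falling back to the start menu."""
    return QUICK_REPLIES.get(state, QUICK_REPLIES["start"])


def autocomplete(partial: str, limit: int = 3) -> list[str]:
    """Suggest known phrases that begin with what the user has typed so far."""
    prefix = partial.strip().lower()
    if not prefix:
        return []
    return [phrase for phrase in KNOWN_PHRASES if phrase.startswith(prefix)][:limit]


print(quick_replies("order_found"))
print(autocomplete("tra"))
```

Prefix matching is the simplest option that works for a demo. Ranking suggestions by how often users pick them would be a natural next step, but that is beyond what the guidelines themselves specify.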

To prevent users from abandoning the task and breaking off conversations, the points below are recommended, albeit with the reasonable small print that the exact effect will depend on the context:

8) Provide information about the conversational context in chatbot responses to bring the user and the chatbot on a common ground of understanding (e.g., tell the current intent and entities the bot has identified).

9) Identify different signs indicating that the user might abandon the interaction soon (e.g., repeated reformulations) and provide alternatives (e.g., human handover).

10) In case of misunderstandings, provide the user the chatbot capabilities and the intents that have the highest probability based on the user’s input so far.

11) In case of misunderstandings, reflect the user message back to the user and highlight signal words (e.g., words that could not be understood or that led to a certain classification of input) and ask for reformulation of the request.

12) When starting the conversation, introduce the chatbot as artificial and provide the user the chatbot capabilities to help manage user expectations.
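Guideline 9 mentions repeated reformulations as a warning sign, and that can be roughly approximated in a few lines. The Python sketch below assumes that a user message which closely resembles the previous one counts as a reformulation. The similarity threshold and the number of reformulations tolerated are arbitrary values chosen for illustration, not figures from the paper.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0-to-1 similarity ratio between two messages, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def count_reformulations(messages: list[str], threshold: float = 0.6) -> int:
    """Count user messages that closely resemble the one sent just before."""
    return sum(
        1
        for previous, current in zip(messages, messages[1:])
        if similarity(previous, current) >= threshold
    )


def should_offer_handover(
    messages: list[str], max_reformulations: int = 2, threshold: float = 0.6
) -> bool:
    """Suggest a human handover once the user keeps rephrasing the same request."""
    return count_reformulations(messages, threshold) >= max_reformulations


user_messages = ["reset password", "reset password", "reset password"]
if should_offer_handover(user_messages):
    print("Would you like me to connect you with a human agent?")
```

Character-level similarity is a blunt instrument, since two differently worded requests can mean the same thing. It is used here only because it ships with the standard library.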

Finally, the authors of the article also touch upon the anthropomorphic design features of chatbots.

They also note that if chatbots get overly friendly and engage in too much small talk, that might distract the user from completing the task at hand, so do try to strike a healthy balance.

13) Use social cues (e.g., emojis, response delays, name etc.) to achieve a certain level of humanness.

14) Choose a consistent conversation style that supports the context of task orientation.
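Of the social cues in guideline 13, response delays are the easiest to put into code. The Python sketch below assumes the delay should grow with the length of the reply, as if someone were typing it, and stay within a floor and a ceiling so users are never left hanging. The typing speed and both limits are made-up defaults, not recommendations from the paper.

```python
def typing_delay(
    reply: str,
    chars_per_second: float = 30.0,
    min_delay: float = 0.5,
    max_delay: float = 3.0,
) -> float:
    """Return a pause in seconds that scales with reply length, within limits."""
    raw_delay = len(reply) / chars_per_second
    return max(min_delay, min(raw_delay, max_delay))


reply = "Your parcel is due to arrive tomorrow before noon."
print(f"Show a typing indicator for {typing_delay(reply):.1f} seconds")
```

In a real widget the returned value would drive a typing indicator before the message appears. Capping the delay keeps the cue from undermining the speed that users also appreciate.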

Should you be craving more detail, the research paper is available via the link provided (with a lengthy list of references, if you want to dig deeper).

Despite earlier assumptions that chatbots cannot handle complex queries, their current (next-generation) iterations seem able to perform more advanced functions and offer personalised support. Not only are they becoming increasingly accepted, but they are now also often preferred over live support. They may be in luck: we are not exactly getting any more patient, and bots have the upper hand when it comes to speedy responses and resolutions.

Still, these eager machines are likelier to augment the work of support teams than to shoulder the entire load by themselves. The idea here is similar to other professional contexts: their role should be to deal with repetitive tasks and free up time, so that their human counterparts can handle unique problems.

This would be the ideal scenario, but keep in mind that chatbots can also be used for more malicious purposes, exposing vulnerabilities and posing security risks. So, as always, try to look at shiny tech offerings through a well-balanced, layered lens, weighing up benefits as well as potential blind spots. The bottom line: not all that chats helps, but bots with pure (or at least neutral) intentions can offer some capital benefit to most, if not all, parties involved.